The Swiss Cheese Method

We've covered a lot of ground in this guide. Unit tests, fuzz tests, invariants, formal verification and more. But the thing that keeps most of the devs up at night is the fact that no single testing method is perfect.

During Devconnect 2022 at Istanbul, I was chatting with Farhaan, smart contracts tech lead for Maple Finance about various testing practices and that's when he shared about the Swiss cheese model. So I started reading that and exploring how to apply it for smart contract testing.

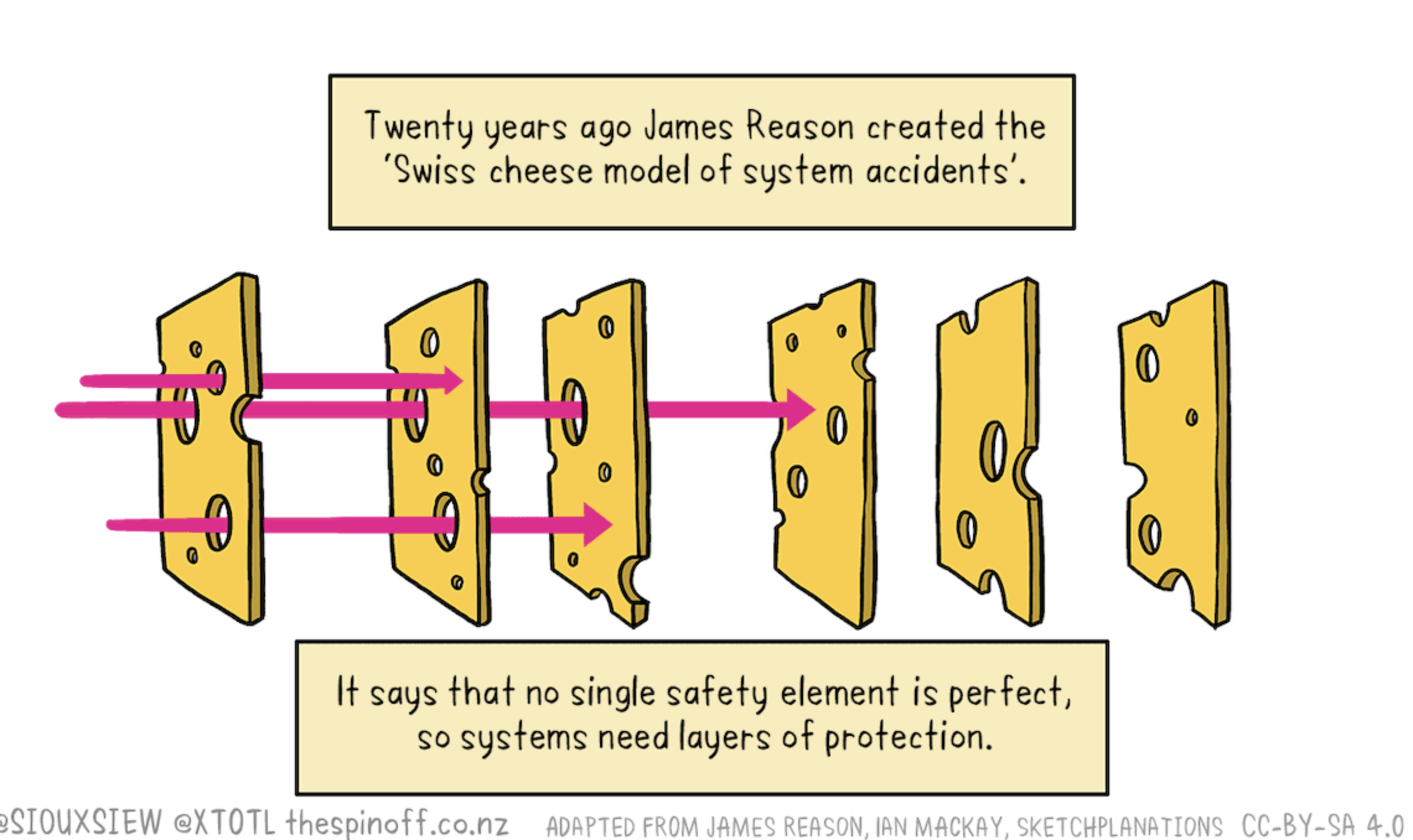

The Swiss Cheese model comes from aviation safety, but it applies to software security as well. Picture a bunch of slices of Swiss cheese stacked together. Each slice represents a different testing or security technique, and the holes in the cheese are the bugs and vulnerabilities that slip through.

Here's the idea: the holes in each slice are in different places. So even though your unit tests might miss something, your integration tests might catch it. And if both of those miss it, maybe your invariant testing might flag it. Or your auditor will spot it. Or your monitoring will catch it in production.

When you stack enough slices together, with the holes in different spots, it becomes really hard for a bug to slip through all the layers. It's not about making any single layer perfect, it's about having enough layers that the odds of everything aligning just right for a bug to sneak through become tiny.

Why This Matters?

Smart contracts are also software with a difference that they handle real money, and they're under constant attack. A single missed bug can cost millions. Remember the DAO? The Ronin bridge exploit? These weren't cases of bad developers – they were good devs who just didn't catch everything.

The problem is that different types of bugs need different techniques to find them. Logic errors show up differently than arithmetic issues. Upgrade problems and access control bugs are different. Economic exploits are completely different from technical vulnerabilities.

If you only rely on basic testing techniques, you're basically hoping that the holes in that one slice of cheese happen to not align with where the bugs are.

Balancer v2 suffered a $100 million exploit even after several audits and testing. So no code is 100% safe out there.

How the Layers Work Together

Here's how different layers can safe guard against different issues:

Layer 1: Basic Testing

-

Unit tests catch basic logic errors and obvious bugs. They're your first line of defense and they're fast to run, so you run them constantly during development. But they test things in isolation, which means they might miss how components interact.

-

Integration tests pick up where unit tests leave off. They catch issues that only appear when multiple contracts talk to each other. Like when your vault contract trusts the price oracle a bit too much, or when the token contract's callback behavior breaks your accounting.

-

Fork testing against mainnet state catches integration issues with real protocols and real conditions. Your protocol might work perfectly in your test environment but can break when interacting with the actual state of Aave or Uniswap.

Layer 2: Property-Based Testing

-

Fuzz testing throws random concrete values at your functions and finds those edge cases you never thought about. Like what happens when someone passes in

uint256.maxor zero or some weird number that causes an overflow, etc., -

Invariant testing (stateful fuzzing) runs sequences of random actions and checks that your system's fundamental rules always hold true. This catches bugs that only appear after a specific sequence of events.

Layer 3: Advanced Testing

-

Lifecycle and Scenario Tests simulate the full lifetime of your protocol. They ensure that the contract behaves correctly over time, especially as it moves through different states and handles a sequence of operations that might occur during its lifespan.

-

Mutation Tests change your code to introduce bugs, then check if your tests catch them. If your tests still pass after you flip a critical condition, your tests aren't good enough. This tests the quality of your tests themselves.

-

Differential Testing implements the same logic in different languages or different ways and checks they give the same results. Useful for complex math where you can write a simpler reference implementation in Python or Typescript and compare against your optimized Solidity version.

-

Formal verification & Symbolic Testing mathematically proves certain properties, which is important for critical calculations. But it's expensive and time-consuming, so you use it for the really critical bits like your accounting logic or access control.

Layer 4: Post deployment

-

External Audits

-

Monitoring watches your contracts in production. Critical monitoring alerts you when something's wrong. Informational monitoring tracks normal behavior so you can spot anomalies.

-

Bug Bounty Programs - This is important to make sure security researches keep scanning your code for potential vulnerabilities.

-

Red Teaming - Internally form teams and attempt to exploit your protocol like real attackers would. This could help you find attack paths you didn't consider during development.

The layers go from fast/cheap/automated (Layer 1) to slow/expensive/manual (Layer 4). You run Layer 1 constantly, Layer 2 regularly, Layer 3 before major releases, and Layer 4 is ongoing throughout the protocol's lifetime.

What the Swiss Cheese model tells us is that we should be intentional about which layers we use and understand what each layer does and doesn't catch. For a simple contract, maybe unit tests plus fuzz testing plus an audit is enough. For a complex protocol, you might want the full stack.

The key is that you shouldn't put all your faith in any single layer. I've seen teams that think "we have 100% code coverage!" and ship with confidence, only to get rekt because coverage doesn't catch logic errors. I've seen teams that think "we had three audits!" and still get exploited because audits don't catch economic attacks that span multiple blocks.

Keep updating your test suite

One more thing that's crucial to understand: this isn't a one-and-done thing. The Swiss Cheese model is ongoing. You deploy with multiple layers of defense, but those layers need maintenance.

Your test suite needs to grow as you add features. Your monitoring needs to adapt as attack patterns evolve. Your invariants need updating when your system's fundamental rules change. When you do an upgrade, you need to run your whole testing stack again.

And here's something that doesn't get talked about enough: the different layers inform each other. When your monitoring catches something weird in production, that should feed back into your test suite. When an auditor finds an issue, that should inform what your invariant tests check for. When fuzz testing finds an edge case, that should become a unit test.

Accepting Imperfection

The hardest part about the Swiss Cheese model might be psychological. It requires accepting that none of your testing will be perfect. Your tests will have bugs. Your auditors will miss things. Your monitoring will have blind spots.

But that's okay. That's the whole point. By accepting that each layer is imperfect but valuable, you build a system that's much stronger than if you'd tried to perfect any single layer.

I've seen protocols that were "only" 90% tested according to the metrics, but they used a good mix of unit tests, integration tests, fuzzing, invariants, and had multiple audits plus monitoring. They've been running in production for years without issues.

Your Testing Philosophy

At the end of the day, the Swiss Cheese model is really about developing a mature testing philosophy. It's about understanding that security and correctness aren't binary states you achieve – they're ongoing efforts requiring multiple complementary approaches.

Every technique we've covered in this guide – from basic unit tests to advanced formal verification – is a slice of cheese. Some slices are thicker than others. Some are more expensive to add to your stack. Some fit better with certain types of projects.

Your job as a developer is to understand what each technique brings to the table, where its blind spots are, and how to combine techniques to cover each other's weaknesses. That's what building confidence in your system really means.

Not that you've eliminated all possible bugs – that's impossible. But that you've done your due diligence across multiple dimensions, and that you have monitoring and response mechanisms in place for the things that might slip through.

Moving Forward

So where do you go from here? Start by auditing your current testing approach. Be honest about what you're doing and what you're not. Identify the gaps. Think about what types of bugs your current testing would miss.

Then start filling in those gaps. You don't need to implement everything at once. Add fuzzing to your test suite. Write some invariants. Set up fork testing. Each layer you add makes your system more robust.

The ecosystem is constantly evolving. New attack vectors emerge. New testing tools become available. Keep learning, keep adapting, and keep stacking those slices of cheese.

Because at the end of the day, building secure smart contracts isn't about being perfect. It's about being thorough, thoughtful, and humble enough to know that you need multiple perspectives and approaches to build something that can be trusted with people's money.

Stay safe out there, and happy testing!