Introduction

Solidity is the backbone of numerous financial applications on the blockchain, which makes writing code in it exciting and challenging at the same time. Our goal, as developers, is not just to write code, but to write code that we can trust with confidence.

In recent times, Solidity has become a hotspot for developers, many of whom are just starting their programming journey. As most smart contracts handle sensitive financial transactions touching users' funds, that is a concern. Experienced developers usually bring a security-first mindset to their coding practices. For newcomers without any prior experience, understanding the intricacies of secure and reliable coding in Solidity can be quite challenging.

This is where this guide comes in. This handbook has been crafted to be a companion in navigating the fundamentals of testing Solidity smart contracts. I understand that there are multiple guides out there, but the resources are scattered. This handbook doesn't dive deep into every specific testing pattern, but it should serve as a very good starting point for understanding testing patterns and best practices. It doesn't stop at the basics, though. This guide also walks you through advanced strategies like mutation testing and the branching tree technique, helping you understand when and how to apply them.

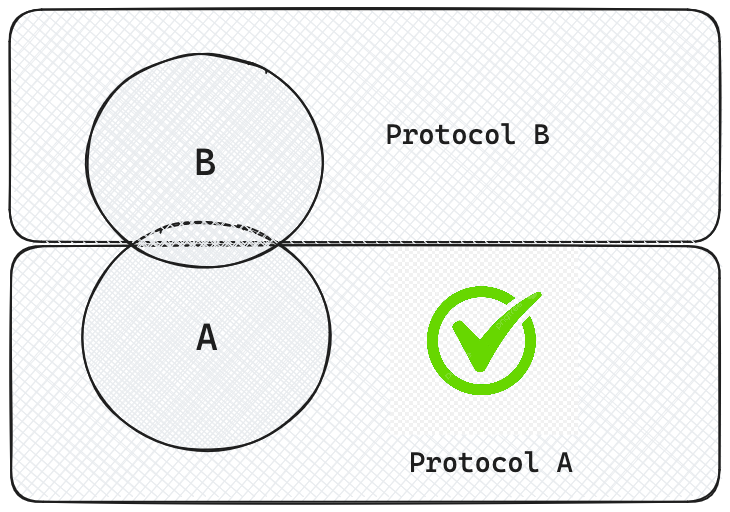

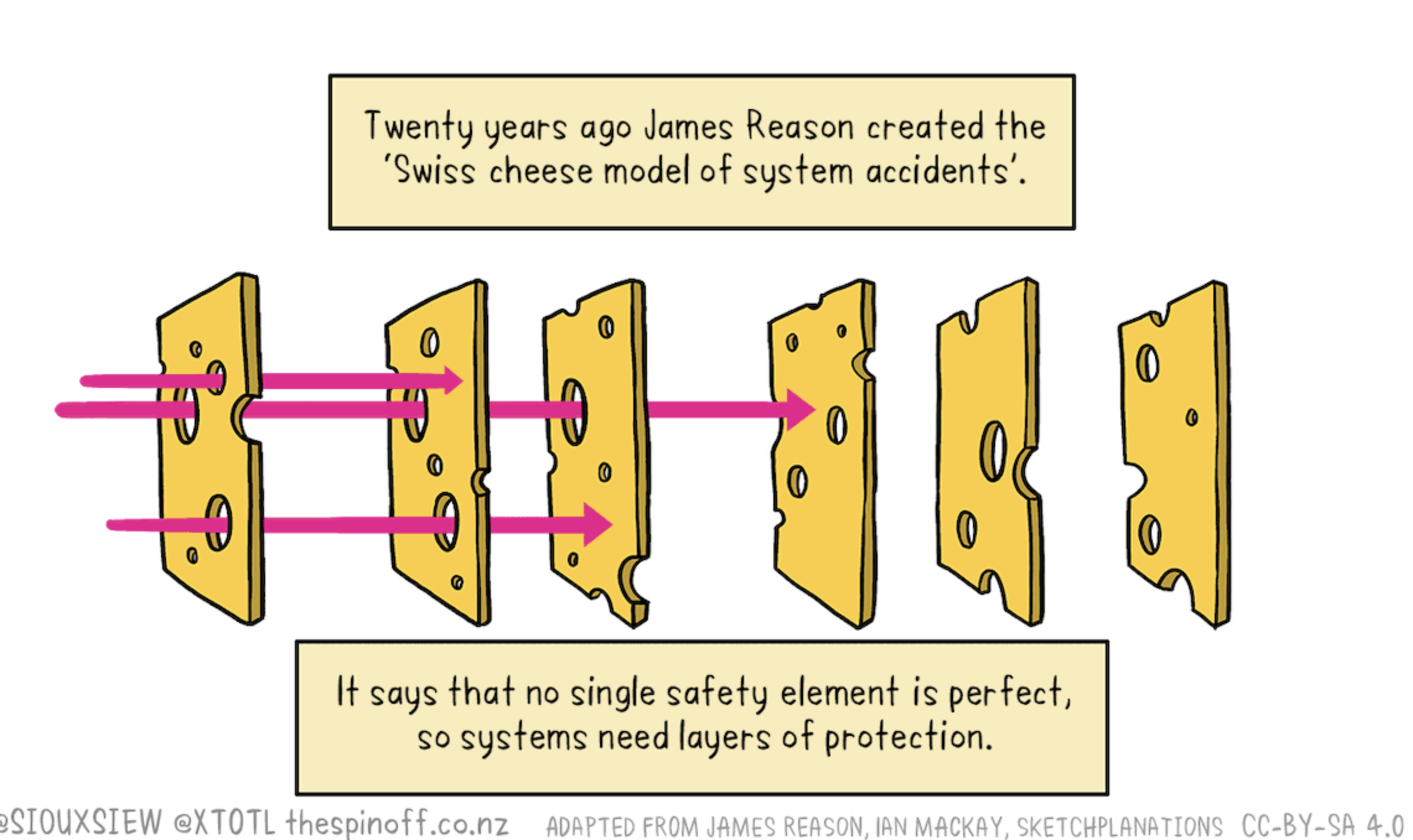

At the end of the day, Solidity smart contracts are just a piece of software. Many protocols rely only on unit tests, or they just have one large test suite focused on fork testing. But I feel that's not sufficient. The number of attacks on the ecosystem doesn't seem to be going down. Each testing method serves a unique purpose, helping developers uncover vulnerabilities in specific parts of the code. For example, fuzz tests are great for finding edge cases in simple mathematical functions, while symbolic testing shines in complex calculation scenarios.

It's crucial to recognize that not all testing methods fit every project. For instance, invariant tests might be challenging to implement when building on top of other protocols.

Getting good at testing:

Alright, let's talk about getting good at testing in Solidity, kind of like learning to ride a bike. You cannot master it overnight. And this is not a zero-to-hero guide. You just have to keep riding, falling off, and then getting back on again. Testing is the same. You write a test, it goes all wonky, and then you fix it. It's all part of the game.

Think of your code like a bunch of Lego blocks. Sometimes you think you’ve built the coolest spaceship, but then you notice it's missing a door or a wheel. That's what bugs in code are like. They're those missing pieces that you only spot when you test. And guess what? Everyone misses a piece now and then. Even the best of us!

Heads up!

This guide isn't your typical, super-serious, polished-to-perfection kind of thing. It actually started as a bunch of notes I jotted down for myself. It’s more like a casual chat over coffee, sharing what I've learned and what others have shared over time.

We’re going to take this nice and easy. No rush. Testing in Solidity, or any coding really, should be fun, not a headache.

Ok. Now make yourself a cup of tea and come back. Let's start with the B A S I C S.

Basic Testing

Basic tests are the crucial, must-have tests for all contracts. These tests should go hand in hand with feature development. By including these tests early, you can identify and address issues promptly, saving time and effort in the long run.

Types:

- Unit Tests: These are the most fundamental type of tests where you check individual functions or components in isolation. They are quick to run and help in identifying the smallest of issues which might be overlooked otherwise.

- Integration Tests: These tests check how different parts of your application work together. They are crucial for ensuring that the combination of various components or functions in your codebase interact as expected.

- Fork Tests: Fork testing involves creating a fork of the network and then deploying your contracts to test in an environment that closely mimics the on-chain network. This helps in understanding how the contracts will behave under real-world conditions.

- Fuzz Tests: In fuzz testing, you input random, invalid, or unexpected data to your contracts and observe how they handle such inputs. This type of testing is excellent for discovering vulnerabilities and ensuring your contracts can handle unexpected or incorrect inputs gracefully.

Remember, each type of test serves a unique purpose and contributes to building robust and secure code. These tests can help uncover approximately 90% of potential issues in your code if implemented properly.

Note: I'll be using Foundry for demonstrating the testing strategies, but you can apply them irrespective of the framework.

Unit tests

Unit testing is the simplest form of testing. As the name suggests, each unit test should just test one thing at a time. It involves testing the smallest parts of your code – often individual functions – to ensure they work as expected.

Key Characteristics:

- Isolation: Should focus on a single functionality.

- Speed: Should run quickly to facilitate rapid iterations.

- Independence: Must not rely on external systems or states.

For example, let's implement unit tests for a simple SetterGetter contract.

```solidity
contract SetterGetter {
    uint256 public number;

    function setNumber(uint256 newNumber) public {
        number = newNumber;
    }

    function getNumber() public view returns (uint256 _number) {
        _number = number;
    }
}
```

You can see that there are only 2 key methods available in the above contract.

- Setting a value to number.

- Retrieving the value stored.

Unit testing the setNumber() method:

```solidity
function test_setNumber() public {
    getterSetter.setNumber(10);
    assertEq(getterSetter.number(), 10);
}
```

As mentioned earlier, the above function tests only one functionality: setNumber(). Note that in the assertion getterSetter.number() is used for validation and not getterSetter.getNumber(). Even though it doesn't make a big difference, we are avoiding the assumption that the user defined getNumber() method returns the actual value stored in the state number. Fewer assumptions help us implement more reliable tests!!
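The snippets in this chapter use a getterSetter instance without showing where it comes from. Here is a minimal sketch of the surrounding test file, assuming forge-std is installed and that the contract under test lives in the same file (the file layout and the SetterGetterTest name are my assumptions, not something taken from the repo):

```solidity
// SPDX-License-Identifier: MIT
pragma solidity ^0.8.20;

import {Test} from "forge-std/Test.sol";

contract SetterGetter {
    uint256 public number;

    function setNumber(uint256 newNumber) public {
        number = newNumber;
    }

    function getNumber() public view returns (uint256 _number) {
        _number = number;
    }
}

// Hypothetical test contract name.
contract SetterGetterTest is Test {
    SetterGetter getterSetter;

    // Foundry runs setUp() before every test, so each test starts from a fresh deployment.
    function setUp() public {
        getterSetter = new SetterGetter();
    }

    function test_setNumber() public {
        getterSetter.setNumber(10);
        // Read the state through the compiler-generated getter.
        assertEq(getterSetter.number(), 10);
    }
}
```

You can run it with forge test. Because setUp() redeploys the contract for each test, one test cannot leak state into another.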

💡 Random Tip:

The Solidity compiler generates a getter method for all public variables (for simple types like uint, bytes32, address, etc.). So if you need to reduce your contract's bytecode size, you can change the variables' visibility to internal or private and expose only the required values via a getter. You can read more about this here.

So it's always a good practice to test the actual state change by reading it directly. By doing so, we are trusting Solidity's auto-generated getter method rather than the user-defined one. When writing tests, the developer should think like an attacker to figure out what could go wrong with the given function. Identifying edge cases is the most difficult part of writing tests. This is where techniques like BTT (the branching tree technique) come into the picture, which we'll cover in a separate chapter.

If possible, protocols should avoid asking the developer(s) responsible for developing the feature to test it.

Do not over test!

When writing tests, it's easy to go beyond the boundaries and start over-testing the functions. By over-testing, I mean writing tests that add very little to no value at all. Tests should be meaningful.

Some examples of over-testing:

- Passing a value greater than what uint256 can hold to make sure it fails.

- Passing an invalid type as input (string, address, etc.) to make sure it fails.

```solidity
function testFail_setNumber() public {
    // Only exercises the compiler's built-in overflow check, not our own logic.
    getterSetter.setNumber(type(uint256).max + 1);
}
```

We already know that Solidity provides overflow protection by default. The goal is to test the user logic, not the compiler. Therefore, it's better to avoid these kinds of tests.

Okay, now let's get back to our setNumber() unit test:

```solidity
function test_setNumber() public {
    getterSetter.setNumber(10);
    assertEq(getterSetter.number(), 10);
}
```

Even though this test works fine in our case, we're making another assumption here: that setNumber() actually updates the value. Consider the following implementation of the setNumber() method:

```solidity
uint256 public number = 10;

function setNumber(uint256 value) public {}
```

The previous test works for this too. But is this a valid implementation? No.

So what do we do about this?

Good question. In order to avoid such scenarios, we need to make sure that the state change actually happens. To test a state change, the best way is to validate the before and after value of the state. So the test would become something like:

```solidity
function test_setNumber() public {
    uint256 numberBefore = getterSetter.number();

    getterSetter.setNumber(10);

    uint256 numberAfter = getterSetter.number();

    assertEq(numberBefore, 0);
    assertEq(numberAfter, 10);
}
```

The scenario explained here is quite simple, but the technique becomes more useful when you apply it in real-world applications. For example, the transfer() method of the ERC20 spec should reduce the sender's balance while increasing the recipient's balance. But most protocols don't make this explicit check in their deposit() method, where the token transfer takes place. They only check the recipient's balance after the transfer. The more robust check would be to compare the before and after balances of both the sender and the recipient, which avoids the assumption that the underlying token actually follows the ERC20 spec and is not malicious.
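To make the idea concrete, here is a rough sketch of a deposit() test that checks both sides of the transfer. MiniToken and MiniVault are hypothetical stand-ins I made up for illustration, stripped down to what the test needs; a real suite would use the protocol's actual contracts.

```solidity
// SPDX-License-Identifier: MIT
pragma solidity ^0.8.20;

import {Test} from "forge-std/Test.sol";

// Hypothetical minimal token, only what the example needs.
contract MiniToken {
    mapping(address => uint256) public balanceOf;
    mapping(address => mapping(address => uint256)) public allowance;

    function mint(address to, uint256 amount) external {
        balanceOf[to] += amount;
    }

    function approve(address spender, uint256 amount) external returns (bool) {
        allowance[msg.sender][spender] = amount;
        return true;
    }

    function transferFrom(address from, address to, uint256 amount) external returns (bool) {
        allowance[from][msg.sender] -= amount;
        balanceOf[from] -= amount;
        balanceOf[to] += amount;
        return true;
    }
}

// Hypothetical vault with a deposit() that pulls tokens from the caller.
contract MiniVault {
    MiniToken public token;
    mapping(address => uint256) public balances;

    constructor(MiniToken _token) {
        token = _token;
    }

    function deposit(uint256 amount) external {
        require(token.transferFrom(msg.sender, address(this), amount), "Transfer failed");
        balances[msg.sender] += amount;
    }
}

contract DepositBalanceTest is Test {
    MiniToken token;
    MiniVault vault;

    function setUp() public {
        token = new MiniToken();
        vault = new MiniVault(token);
        token.mint(address(this), 100);
        token.approve(address(vault), 100);
    }

    function test_deposit_movesTokensOnBothSides() public {
        uint256 senderBefore = token.balanceOf(address(this));
        uint256 vaultBefore = token.balanceOf(address(vault));

        vault.deposit(40);

        // Check both sides of the transfer, not just the recipient.
        assertEq(token.balanceOf(address(this)), senderBefore - 40);
        assertEq(token.balanceOf(address(vault)), vaultBefore + 40);
        assertEq(vault.balances(address(this)), 40);
    }
}
```

If the token credited the vault without debiting the sender, the first assertion would fail even though a recipient-only check would still pass.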

Implementing test for getNumber() method:

For the getter method, the test would be straightforward.

Simpler version (more assumptions):

```solidity
function test_getNumber_Simple() public {
    getterSetter.setNumber(10);
    assertEq(getterSetter.getNumber(), 10);
}
```

Robust version (fewer assumptions):

```solidity
function test_getNumber_Robust() public {
    getterSetter.setNumber(322e26);
    assertEq(getterSetter.getNumber(), 322e26);
    assertEq(getterSetter.getNumber(), getterSetter.number());

    getterSetter.setNumber(0);
    assertEq(getterSetter.getNumber(), 0);
    assertEq(getterSetter.getNumber(), getterSetter.number());
}
```

I'll leave it to the readers to examine why the latter test is stronger than the former.

All the code snippets in this guide are available on GitHub for your reference.

Mocking:

In some cases, you might need to mock certain calls to unit test the functions. For example, consider a deposit() function in which some ERC20 tokens are transferred to a Vault contract. Instead of deploying a mock ERC20 contract and trying to perform an actual transferFrom() call, you can use the vm.mockCall() cheatcode (from Foundry) and make the transferFrom() call return true, so that you can go ahead and test the actual logic while ignoring the nuances of setting up a token contract. This facilitates the testing of the contract's logic in isolation, bypassing the complexities associated with setting up and interacting with other contracts.

deposit() method:

```solidity
function deposit(uint256 _amount) external {
    require(token.transferFrom(msg.sender, address(this), _amount), "Transfer failed");
    balances[msg.sender] += _amount;
}
```

Unit test:

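```solidity
// Vault.t.sol
contract VaultTest is Test {
    // ...
    address tokenA = makeAddr("TokenA");
    // ...

    function test_deposit() external {
        // Make every transferFrom() call on tokenA return true.
        vm.mockCall(address(tokenA), abi.encodeWithSelector(IERC20.transferFrom.selector), abi.encode(true));

        vault.deposit(10);

        // assertEq reports both values on failure, unlike a bare assert().
        assertEq(vault.balances(address(this)), 10);
    }
}
```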

This approach enables focused testing on the contract in question, allowing for a more efficient and targeted validation of its logic and behavior. For comprehensive testing that involves the entire transaction flow and interaction between multiple contracts, integration tests should be implemented.
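One thing a selector-only mock doesn't tell you is whether deposit() called the token with the right arguments. A possible way to tighten this, sketched below, is to mock the exact calldata and pair it with Foundry's vm.expectCall() cheatcode. The Vault constructor and the VaultMockTest name are my assumptions, since the original snippet elides the setup.

```solidity
// SPDX-License-Identifier: MIT
pragma solidity ^0.8.20;

import {Test} from "forge-std/Test.sol";

interface IERC20 {
    function transferFrom(address from, address to, uint256 amount) external returns (bool);
}

// Assumed shape of the Vault; only deposit() comes from the text above.
contract Vault {
    IERC20 public token;
    mapping(address => uint256) public balances;

    constructor(address _token) {
        token = IERC20(_token);
    }

    function deposit(uint256 _amount) external {
        require(token.transferFrom(msg.sender, address(this), _amount), "Transfer failed");
        balances[msg.sender] += _amount;
    }
}

contract VaultMockTest is Test {
    address tokenA = makeAddr("TokenA");
    Vault vault;

    function setUp() public {
        vault = new Vault(tokenA);
    }

    function test_deposit_callsTransferFromWithExpectedArgs() public {
        bytes memory expected =
            abi.encodeCall(IERC20.transferFrom, (address(this), address(vault), 10));

        // Mock only this exact call, and require that it actually happens.
        vm.mockCall(tokenA, expected, abi.encode(true));
        vm.expectCall(tokenA, expected);

        vault.deposit(10);

        assertEq(vault.balances(address(this)), 10);
    }
}
```

With this setup, a deposit() that pulled tokens from the wrong address or for the wrong amount would no longer match the mocked calldata, so the test should fail instead of passing silently.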

Integration tests

Unit testing is a vital step in ensuring each individual contract works as expected. However, protocols often involve several contracts working together. It's crucial to check that these contracts interact correctly, which is where integration testing becomes essential. The goal of the integration test should be to ensure that our contracts work together as expected, without focusing on the behavior of external contracts.

Points to note:

- It is essential to simulate the actual deployment environment as closely as possible, which means using real contract deployments instead of mocks. This ensures the tests reflect real-world operation and interactions.

- Integration tests should concentrate on the interaction between contracts rather than repeating validations of internal logic covered by unit tests. This approach keeps tests focused and avoids redundancy.

- Typically, integration tests follow unit tests in the development cycle. Once individual components are verified to work as expected, integration tests assess the system's overall functionality.

Consider a Governance contract that manages a Vault contract, which in turn handles deposits and withdrawals of ERC20 tokens. To ensure the governance and vault contracts operate without breaking, proper integration tests should be implemented. This confirms that the protocol functions properly as a whole, not just in isolation.

Example:

Below is a simple example for illustrating the integration test. There is a Governance contract that sets a value in the Vault contract.

```solidity
contract Governance {
    address public owner;
    mapping(address vault => uint256 rewardRate) rewardRates;

    constructor() {
        owner = msg.sender;
    }

    modifier onlyOwner() {
        require(msg.sender == owner, "Only the owner can perform this action");
        _;
    }

    function setRewardRate(address _vaultAddress, uint256 _rewardRate) public onlyOwner {
        rewardRates[_vaultAddress] = _rewardRate;
        IVault(_vaultAddress).setRewardRate(_rewardRate);
    }
}
```

Vault contract:

```solidity
contract Vault {
    // ...

    function setRewardRate(uint256 _rewardRate) public onlyGovernance {
        rewardRate = _rewardRate;
    }

    // ...
}
```

To ensure integration tests are effective and reflect real-world scenarios, it's important to set up the testing environment accurately. This means using actual contract deployments rather than mock addresses or simplified versions, so that the tests closely mimic how these contracts would interact in a live setting.

So the integration test for the above contract would look something like:

```solidity
contract GovernanceIntegrationTest is Test {
    Vault vault;
    Governance governance;

    function setUp() public {
        governance = new Governance();
        vault = new Vault(address(governance));
    }

    function testGovernanceUpdatesRewardRate() public {
        uint256 newRewardRate = 100;

        governance.setRewardRate(address(vault), newRewardRate);

        assertEq(vault.rewardRate(), newRewardRate, "Vault's rewardRate should be updated to 100");
    }
}
```

The above test validates that the reward rate in the vault contract has been successfully updated by the governance contract. Notice that we're not validating whether the rewardRates mapping is updated with the reward rate, as that belongs in a unit test.
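The happy path is only half of the interaction. Below is a sketch of the negative cases, checking that the reward rate can only change through the intended route. The Vault internals (the constructor, the governance variable, the onlyGovernance modifier and its revert message) are my assumptions, because the original snippet elides them; Governance is trimmed to the parts the test touches.

```solidity
// SPDX-License-Identifier: MIT
pragma solidity ^0.8.20;

import {Test} from "forge-std/Test.sol";

interface IVault {
    function setRewardRate(uint256 _rewardRate) external;
}

contract Governance {
    address public owner;

    constructor() {
        owner = msg.sender;
    }

    function setRewardRate(address _vaultAddress, uint256 _rewardRate) public {
        require(msg.sender == owner, "Only the owner can perform this action");
        IVault(_vaultAddress).setRewardRate(_rewardRate);
    }
}

// Assumed Vault internals: constructor, modifier and revert message are illustrative.
contract Vault {
    address public governance;
    uint256 public rewardRate;

    constructor(address _governance) {
        governance = _governance;
    }

    modifier onlyGovernance() {
        require(msg.sender == governance, "Only governance");
        _;
    }

    function setRewardRate(uint256 _rewardRate) public onlyGovernance {
        rewardRate = _rewardRate;
    }
}

contract GovernanceAccessIntegrationTest is Test {
    Governance governance;
    Vault vault;

    function setUp() public {
        governance = new Governance();
        vault = new Vault(address(governance));
    }

    function test_vaultRejectsDirectCalls() public {
        // The test contract owns Governance, but it is not the Governance contract itself.
        vm.expectRevert(bytes("Only governance"));
        vault.setRewardRate(100);
        assertEq(vault.rewardRate(), 0);
    }

    function test_nonOwnerCannotRouteThroughGovernance() public {
        vm.prank(makeAddr("stranger"));
        vm.expectRevert(bytes("Only the owner can perform this action"));
        governance.setRewardRate(address(vault), 100);
        assertEq(vault.rewardRate(), 0);
    }
}
```

Both contracts are real deployments here, so the test exercises the actual access-control wiring between them rather than a mocked version of it.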

💡 Random Tip:

To test the functions with external call to other contracts, you can follow the mocking technique discussed in the Unit test chapter.

Key takeaways:

- Integration tests should come after unit tests.

- All contracts should be properly set up, avoiding mock contracts.

- Should not repeat the validations performed in the unit tests.

Fork tests

Fork tests are very similar to integration tests. They ensure that our contracts work together as expected, but in a live environment with little or no mocking. This helps us mimic the behavior of the smart contracts post-deployment and catch any unexpected behavior.

While mocks can help you test basic interactions quickly, they often don’t capture real-world behavior, meaning critical bugs can slip through unnoticed. This is where fork tests come in.

In this chapter, we'll walk through a simple example of how fork tests can be helpful. For this example, we can consider a simple LiquidityAdder contract that has a function to add liquidity.

```solidity
contract LiquidityAdder {
    IUniswapV2Router02 public uniswapRouter;

    constructor(address _uniswapRouter) {
        uniswapRouter = IUniswapV2Router02(_uniswapRouter);
    }

    function addLiquidity(
        address tokenA,
        address tokenB,
        uint amountADesired,
        uint amountBDesired
    ) external returns (uint amountA, uint amountB, uint liquidity) {
        // Pull the tokens from the caller into this contract.
        IERC20(tokenA).transferFrom(msg.sender, address(this), amountADesired);
        IERC20(tokenB).transferFrom(msg.sender, address(this), amountBDesired);

        return uniswapRouter.addLiquidity(
            tokenA,
            tokenB,
            amountADesired,
            amountBDesired,
            0,
            0,
            msg.sender,
            block.timestamp
        );
    }
}
```

The function addLiquidity() in the above contract just pulls the tokens from the user and adds liquidity to the Uniswap V2 pool. Let's add a unit test for the above method.

```solidity
contract LiquidityAdderTest is Test {
    LiquidityAdder liquidityAdder;
    address constant UNISWAP_ROUTER = address(0xdeadbeef);

    function setUp() public {
        liquidityAdder = new LiquidityAdder(UNISWAP_ROUTER);
    }

    function testAddLiquidityMock() public {
        address tokenA = address(0x1);
        address tokenB = address(0x2);

        // Mock token transfers
        vm.mockCall(
            tokenA,
            abi.encodeWithSelector(IERC20.transferFrom.selector),
            abi.encode(true)
        );
        vm.mockCall(
            tokenB,
            abi.encodeWithSelector(IERC20.transferFrom.selector),
            abi.encode(true)
        );

        // Mock the addLiquidity function call
        vm.mockCall(
            UNISWAP_ROUTER,
            abi.encodeWithSelector(IUniswapV2Router02.addLiquidity.selector),
            abi.encode(1000, 1000, 1000)
        );

        (uint amountA, uint amountB, uint liquidity) = liquidityAdder.addLiquidity(tokenA, tokenB, 1000, 1000);

        assertEq(amountA, 1000);
        assertEq(amountB, 1000);
        assertEq(liquidity, 1000);
    }
}
```

When you run the above test, it passes. Voila! But does it actually validate that the logic works on-chain after deploying the contracts? To make sure it works, let's implement a fork test with real mainnet addresses and without any mocks.

```solidity
contract LiquidityAdderForkTest is Test {
    LiquidityAdder liquidityAdder;

    address constant UNISWAP_ROUTER = 0x7a250d5630B4cF539739dF2C5dAcb4c659F2488D;
    address constant USDC = 0xA0b86991c6218b36c1d19D4a2e9Eb0cE3606eB48;
    address constant WETH = 0xC02aaA39b223FE8D0A0e5C4F27eAD9083C756Cc2;
    address constant TOKEN_WHALE = 0x8EB8a3b98659Cce290402893d0123abb75E3ab28;

    function setUp() public {
        // Fork Ethereum mainnet
        vm.createSelectFork("https://rpc.flashbots.net");
        liquidityAdder = new LiquidityAdder(UNISWAP_ROUTER);
    }

    function testAddLiquidityFork() public {
        // Act as an account that already holds both tokens on mainnet.
        vm.startPrank(TOKEN_WHALE);

        IERC20(USDC).approve(address(liquidityAdder), 1000e6);
        IERC20(WETH).approve(address(liquidityAdder), 1 ether);

        (uint amountA, uint amountB, uint liquidity) = liquidityAdder.addLiquidity(USDC, WETH, 1000e6, 1 ether);

        vm.stopPrank();
    }
}
```

When you run the above test, you can see it fails with the following error:

```
│ │ ├─ [8384] 0xA0b86991c6218b36c1d19D4a2e9Eb0cE3606eB48::transferFrom(LiquidityAdder: [0x5615dEB798BB3E4dFa0139dFa1b3D433Cc23b72f], 0xB4e16d0168e52d35CaCD2c6185b44281Ec28C9Dc, 1000000000 [1e9])
│ │ │ ├─ [7573] 0x43506849D7C04F9138D1A2050bbF3A0c054402dd::transferFrom(LiquidityAdder: [0x5615dEB798BB3E4dFa0139dFa1b3D433Cc23b72f], 0xB4e16d0168e52d35CaCD2c6185b44281Ec28C9Dc, 1000000000 [1e9]) [delegatecall]
│ │ │ │ └─ ← [Revert] revert: ERC20: transfer amount exceeds allowance
│ │ │ └─ ← [Revert] revert: ERC20: transfer amount exceeds allowance
│ │ └─ ← [Revert] revert: TransferHelper: TRANSFER_FROM_FAILED
│ └─ ← [Revert] revert: TransferHelper: TRANSFER_FROM_FAILED
└─ ← [Revert] revert: TransferHelper: TRANSFER_FROM_FAILED
```

You might be surprised to find that it fails! The error message indicates that the Uniswap router reverted the transaction. What happened?

The issue is in our LiquidityAdder contract. We're transferring tokens to the contract itself, but we never approved the Uniswap router to spend these tokens on behalf of the contract. The mock test didn't catch this because we mocked all the calls, but the fork test revealed the bug. We can see how fork tests can be useful even if we have unit/integration tests in place.
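For reference, here is a sketch of what the corrected contract could look like, with the approvals in place before the router call. The two interfaces are trimmed-down versions I wrote for this example; they only declare the functions used here.

```solidity
// SPDX-License-Identifier: MIT
pragma solidity ^0.8.20;

// Minimal interfaces, reduced to what this example calls.
interface IERC20 {
    function approve(address spender, uint256 amount) external returns (bool);
    function transferFrom(address from, address to, uint256 amount) external returns (bool);
}

interface IUniswapV2Router02 {
    function addLiquidity(
        address tokenA,
        address tokenB,
        uint256 amountADesired,
        uint256 amountBDesired,
        uint256 amountAMin,
        uint256 amountBMin,
        address to,
        uint256 deadline
    ) external returns (uint256 amountA, uint256 amountB, uint256 liquidity);
}

contract LiquidityAdder {
    IUniswapV2Router02 public uniswapRouter;

    constructor(address _uniswapRouter) {
        uniswapRouter = IUniswapV2Router02(_uniswapRouter);
    }

    function addLiquidity(address tokenA, address tokenB, uint256 amountADesired, uint256 amountBDesired)
        external
        returns (uint256 amountA, uint256 amountB, uint256 liquidity)
    {
        IERC20(tokenA).transferFrom(msg.sender, address(this), amountADesired);
        IERC20(tokenB).transferFrom(msg.sender, address(this), amountBDesired);

        // The router pulls tokens from this contract, so this contract must approve it first.
        IERC20(tokenA).approve(address(uniswapRouter), amountADesired);
        IERC20(tokenB).approve(address(uniswapRouter), amountBDesired);

        return uniswapRouter.addLiquidity(
            tokenA, tokenB, amountADesired, amountBDesired, 0, 0, msg.sender, block.timestamp
        );
    }
}
```

This is still a teaching example rather than production code: the return values of transferFrom() and approve() are ignored, the zero minimum amounts give no slippage protection, and any tokens the router doesn't use stay in the contract. Those are exactly the kind of issues a fork test against real tokens can help surface.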

Some Foundry tips for fork tests:

- The createSelectFork() cheatcode creates a fork and makes it active. createFork() only creates the fork. Both cheatcodes return a forkId.

- Use selectFork(forkId) to switch between chains during your fork tests. Remember to use the vm.makePersistent() cheatcode to persist deployments across the selected forks.

- To make the fork tests faster, pass the block number next to the URL param when creating the fork.

- Use tools like mesc to automatically fetch the RPC URL in your tests. For example:

```solidity
function fetchRpcUrlFromMesc(string memory networkName) internal returns (string memory url) {
    // Runs `mesc url <networkName>` through the ffi cheatcode.
    string[] memory inputs = new string[](3);
    inputs[0] = "mesc";
    inputs[1] = "url";
    inputs[2] = networkName;

    bytes memory res = vm.ffi(inputs);
    url = string(res);
}

function setUp() public {
    string memory network = "avax-c-chain";
    uint256 cchainForkId = vm.createSelectFork(fetchRpcUrlFromMesc(network), 41022344);

    network = "eth-mainnet";
    uint256 mainnetForkId = vm.createSelectFork(fetchRpcUrlFromMesc(network), 19120056);

    // Do something
}
```
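To illustrate the selectFork() and makePersistent() tip, here is a rough sketch of a test that deploys a contract on one fork and keeps using it after switching to another. Counter is a made-up contract, and MAINNET_RPC_URL and AVAX_RPC_URL are assumed environment variable names; swap in whatever your setup uses.

```solidity
// SPDX-License-Identifier: MIT
pragma solidity ^0.8.20;

import {Test} from "forge-std/Test.sol";

// Hypothetical contract whose state should follow the test across forks.
contract Counter {
    uint256 public count;

    function increment() external {
        count++;
    }
}

contract MultiForkTest is Test {
    uint256 mainnetForkId;
    uint256 cchainForkId;
    Counter counter;

    function setUp() public {
        // MAINNET_RPC_URL and AVAX_RPC_URL are assumed environment variable names.
        mainnetForkId = vm.createFork(vm.envString("MAINNET_RPC_URL"), 19120056);
        cchainForkId = vm.createFork(vm.envString("AVAX_RPC_URL"), 41022344);

        vm.selectFork(mainnetForkId);
        counter = new Counter();
        // Without this, the Counter would only exist on the mainnet fork.
        vm.makePersistent(address(counter));
    }

    function test_persistentContractSurvivesForkSwitch() public {
        counter.increment();

        vm.selectFork(cchainForkId);
        assertEq(vm.activeFork(), cchainForkId);

        // A persistent account keeps its code and storage on the newly selected fork.
        assertEq(counter.count(), 1);
    }
}
```

Pinning the block numbers, as in the mesc example, also lets Foundry cache the fork state, so repeated runs don't hit the RPC for the same data.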

[!TIP] You can find more foundry related tips and techniques in my blog post here.

Do we even need integration tests?

Now you might ask: since mocking is dangerous and error-prone, do we even need integration tests? The answer is, it depends. The example we saw is quite basic. For a complex protocol, a single function might interact with multiple different contracts (both internal and external). In such cases, integration tests help us carefully curate tests to identify edge cases in different interactions. Integration tests are also fast. So yes, in most cases integration tests provide value.

For some protocols, integration tests might not be the most effective approach, especially when dealing with a single contract that primarily interacts with external protocols. On the other hand, for protocols that don't rely on external contract calls, fork tests may not add much value.

Therefore, it's important to tailor the test suite to the specific needs of the protocol by focusing on what makes sense for each scenario. A well-tailored suite provides a much higher level of confidence before deploying your contracts to mainnet.

Recap:

So far we've looked into unit tests, integration tests and fork tests. Each method is useful in its own way. When implemented correctly, these tests can find most bugs. For very basic contracts that don't do anything unusual, these three types of tests are enough to make the test suite strong against attacks.

Fuzz tests

As mentioned in the previous section, unit, integration and fork tests are enough for most protocols to get a decent test suite that helps find most of the low-hanging bugs. However, there are times when they might not catch every possible bug, especially in complex smart contracts with functions that involve heavy math.

While the basic tests can check the obvious scenarios, they might miss unexpected edge cases. What happens if someone inputs a number that's way larger than you anticipated? Or a negative number when only positives make sense?

This is where fuzz tests come in handy. Fuzz testing involves bombarding your functions with a wide range of random, unexpected inputs to see how they react. It's like throwing everything but the kitchen sink at your code to ensure it can handle anything that comes its way.

Types:

There are 2 types of fuzzing.

- Stateful

- Stateless

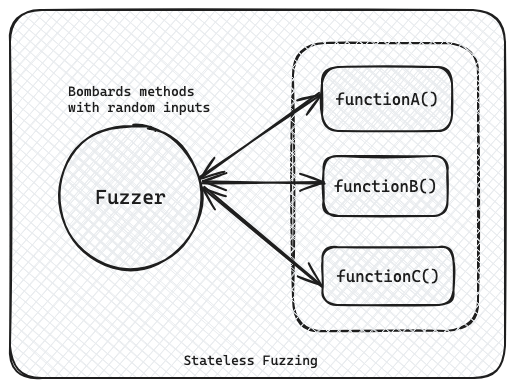

Stateless tests are the basic ones. They don't keep track of the state or the sequence of the calls, so they're fast.

Stateful fuzz tests are also called invariant tests, as they make sure the defined invariants hold despite multiple methods being called in random sequence several times. We'll look into invariant tests in the next chapter. For now, we focus on stateless fuzz tests.

Example:

Let's look into a simple example to demonstrate how to set up fuzz tests using Foundry and how they can be beneficial in finding hidden bugs.

Consider the following simplified lending protocol implementation:

```solidity
contract SampleLending {
    uint256 public constant FEE_PERCENTAGE = 1000; // 10%
    address public feeReceiver;

    constructor(address _feeReceiver) {
        feeReceiver = _feeReceiver;
    }

    function calculateInterest(uint256 principal, uint256 rate, uint256 time) public pure returns (uint256 interest, uint256 fees) {
        interest = (rate * principal * time) / 10000 / 365 days;
        fees = (FEE_PERCENTAGE * interest) / 10000;
        interest -= fees;
    }

    function repay(address token, uint256 principal, uint256 rate, uint256 time) external {
        (uint256 interest, uint256 fees) = calculateInterest(principal, rate, time);
        IERC20(token).transferFrom(msg.sender, feeReceiver, fees);
    }
}

contract MockToken {
    mapping(address => uint256) private _balances;
    mapping(address => mapping(address => uint256)) private _allowances;

    function mint(address account, uint256 amount) external {
        _balances[account] += amount;
    }

    // Needed by the tests below, which call token.approve().
    function approve(address spender, uint256 amount) external returns (bool) {
        _allowances[msg.sender][spender] = amount;
        return true;
    }

    function transferFrom(address sender, address recipient, uint256 amount) external returns (bool) {
        require(amount > 0, "Cannot transfer zero tokens");
        require(_balances[sender] >= amount, "Insufficient balance");
        require(_allowances[sender][msg.sender] >= amount, "Insufficient allowance");

        _balances[sender] -= amount;
        _balances[recipient] += amount;
        _allowances[sender][msg.sender] -= amount;
        return true;
    }
}
```

This contract calculates interest and fees for a loan and facilitates repayment. At first glance, it appears to be a straightforward implementation. Let's write some tests to validate the logic.

Unit Test:

Unit test for this function would be something like this:

```solidity
function testRepayment() public {
    uint256 principal = 1000 ether;
    uint256 rate = 1000; // 10% APR
    uint256 time = 30 days;

    (uint256 interest, uint256 fees) = protocol.calculateInterest(principal, rate, time);
    assertGt(fees, 0, "Fees should be greater than zero");

    vm.startPrank(address(this));
    token.mint(address(this), fees);
    token.approve(address(protocol), type(uint256).max);
    protocol.repay(address(token), principal, rate, time);
    vm.stopPrank();
}
```

This test passes successfully, giving us a false sense of security. It verifies that the contract works as expected for a specific, "happy path" scenario.

Adding the fuzz test:

Now, let's consider a fuzz test for the same contract:

```solidity
function testFuzz_Repayment(uint256 principal, uint256 rate, uint256 time) public {
    vm.assume(principal > 0 && principal <= 1e36); // Up to 1e18 whole tokens at 18 decimals
    vm.assume(rate >= 10 && rate <= 100000); // 0.1% to 1000% APR
    vm.assume(time >= 100 && time <= 365 days);

    (uint256 interest, uint256 fees) = protocol.calculateInterest(principal, rate, time);

    vm.startPrank(address(this));
    token.mint(address(this), fees);
    token.approve(address(protocol), type(uint256).max);
    protocol.repay(address(token), principal, rate, time);
    vm.stopPrank();
}
```

This fuzz test generates random values for principal, rate, and time within reasonable bounds. By doing so, we can use a vast range of possible inputs, helping us identify edge cases.

Running the fuzz test reveals an important issue: the contract fails when the calculated fees/interests are zero. The output would be something like this:

```
[FAIL. Reason: revert: Cannot transfer zero tokens; counterexample: calldata=0x92d09fa000000000000000000000000000000000000000000000000000000000000003b1000000000000000000000000000000000000000000000000000000000000028f0000000000000000000000000000000000000000000000000000000000001613 args=[945, 655, 5651]] testFuzz_Repayment(uint256,uint256,uint256) (runs: 0, μ: 0, ~: 0)
Logs:
  Principal: 945
  Rate: 655
  Time: 5651
  Fees: 0
  Interest: 0
```

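This happens because integer division truncates: for the counterexample above, 655 * 945 * 5651 / 10000 / 365 days rounds down to zero, so both the interest and the fees are zero. Some ERC20 token implementations (similar to our MockToken) revert on zero-value transfers, a behavior our contract doesn't account for.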

The root of the problem lies in the repay function:

```solidity
function repay(address token, uint256 principal, uint256 rate, uint256 time) external {
    (uint256 interest, uint256 fees) = calculateInterest(principal, rate, time);
    IERC20(token).transferFrom(msg.sender, feeReceiver, fees);
}
```

This function unconditionally attempts to transfer fees, even when they amount to zero. While this works fine with many ERC20 implementations, it fails with tokens that explicitly disallow zero-value transfers.

Implementing the Fix

To resolve this issue, we need to add a check before attempting the fee transfer:

```solidity
function repay(address token, uint256 principal, uint256 rate, uint256 time) external {
    (uint256 interest, uint256 fees) = calculateInterest(principal, rate, time);

    if (fees > 0) {
        IERC20(token).transferFrom(msg.sender, feeReceiver, fees);
    }
}
```

This simple check ensures that we only attempt to transfer fees when they are non-zero, thereby avoiding potential reverts with certain ERC20 implementations.
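Once a fuzzer hands you a counterexample, it's worth pinning it down as a regular unit test so the bug can't quietly come back. A minimal sketch of that idea follows; FixedLending, ZeroRevertingToken and the test names are hypothetical, and the token is reduced to the one behavior that matters here.

```solidity
// SPDX-License-Identifier: MIT
pragma solidity ^0.8.20;

import {Test} from "forge-std/Test.sol";

interface IERC20 {
    function transferFrom(address from, address to, uint256 amount) external returns (bool);
}

// Hypothetical stand-in for a token that rejects zero-value transfers.
contract ZeroRevertingToken {
    function transferFrom(address, address, uint256 amount) external pure returns (bool) {
        require(amount > 0, "Cannot transfer zero tokens");
        return true;
    }
}

// The lending logic from above, with the fees > 0 guard applied.
contract FixedLending {
    uint256 public constant FEE_PERCENTAGE = 1000; // 10%
    address public feeReceiver;

    constructor(address _feeReceiver) {
        feeReceiver = _feeReceiver;
    }

    function calculateInterest(uint256 principal, uint256 rate, uint256 time)
        public
        pure
        returns (uint256 interest, uint256 fees)
    {
        interest = (rate * principal * time) / 10000 / 365 days;
        fees = (FEE_PERCENTAGE * interest) / 10000;
        interest -= fees;
    }

    function repay(address token, uint256 principal, uint256 rate, uint256 time) external {
        (, uint256 fees) = calculateInterest(principal, rate, time);
        if (fees > 0) {
            IERC20(token).transferFrom(msg.sender, feeReceiver, fees);
        }
    }
}

contract RepayRegressionTest is Test {
    FixedLending protocol;
    ZeroRevertingToken token;

    function setUp() public {
        protocol = new FixedLending(makeAddr("feeReceiver"));
        token = new ZeroRevertingToken();
    }

    // Inputs taken from the fuzzer's counterexample: args=[945, 655, 5651].
    function test_repay_zeroFeesDoesNotRevert() public {
        (uint256 interest, uint256 fees) = protocol.calculateInterest(945, 655, 5651);
        assertEq(interest, 0);
        assertEq(fees, 0);

        // Without the fees > 0 guard this call would revert with "Cannot transfer zero tokens".
        protocol.repay(address(token), 945, 655, 5651);
    }
}
```

The fuzz test stays in the suite as well; the pinned case just guarantees that this particular input is exercised on every run, regardless of what the fuzzer happens to generate.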

Tuning the fuzz tests:

You may have noticed that the test uses multiple vm.assume() cheatcodes. It is a feature provided by Foundry to constrain inputs to realistic ranges. This gives us:

- Overflow prevention: By limiting principal to 1e36 (1e18 whole tokens at 18 decimals), we avoid overflow in most cases.

- Realistic scenarios: The bounds ensure we're testing with values that could occur in the real world.

- Focused testing: We ensure we're testing the full range of relevant inputs, including edge cases.

- Efficiency: Every test run uses meaningful inputs, making better use of the testing time.

When we don't properly tune inputs for fuzz testing, false positives become more likely, as tests might often fail due to unrelated issues like overflows rather than the actual bug we're looking for. Important bugs can be missed if edge cases, such as small values or unusual rates, aren't adequately covered. Also untuned fuzz tests often waste CPU resources on unrealistic scenarios, making the process inefficient.

In conclusion, tuning inputs in fuzz testing is crucial for:

- Ensuring realistic and meaningful test scenarios

- Efficiently covering the input space, including edge cases

- Avoiding false positives due to overflow or other irrelevant issues

- Making the best use of limited testing resources

By carefully constraining our inputs using bound or assume, we can create more effective fuzz tests that are better at catching subtle bugs while avoiding wasted cycles on unrealistic scenarios.
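As a sketch of the bound alternative: vm.assume() discards any input that fails its condition, and Foundry gives up on a test when too many inputs get rejected, which becomes likely when the ranges are narrow compared to the full uint256 space. The bound() helper from forge-std instead maps each fuzzed value into the range. InterestMath and the property being checked below are my own illustration, not part of the original example.

```solidity
// SPDX-License-Identifier: MIT
pragma solidity ^0.8.20;

import {Test} from "forge-std/Test.sol";

// Hypothetical wrapper around the interest formula used in this chapter.
contract InterestMath {
    uint256 public constant FEE_PERCENTAGE = 1000; // 10%

    function calculateInterest(uint256 principal, uint256 rate, uint256 time)
        public
        pure
        returns (uint256 interest, uint256 fees)
    {
        interest = (rate * principal * time) / 10000 / 365 days;
        fees = (FEE_PERCENTAGE * interest) / 10000;
        interest -= fees;
    }
}

contract BoundedFuzzTest is Test {
    InterestMath math;

    function setUp() public {
        math = new InterestMath();
    }

    function testFuzz_calculateInterest_bounded(uint256 principal, uint256 rate, uint256 time) public {
        // bound() maps any fuzzed value into the range instead of discarding it.
        principal = bound(principal, 1, 1e36);
        rate = bound(rate, 10, 100_000);
        time = bound(time, 100, 365 days);

        (uint256 interest, uint256 fees) = math.calculateInterest(principal, rate, time);

        // Fees are 10% of the gross interest, so they can never exceed the net interest.
        assertLe(fees, interest);
    }
}
```

With these bounds the largest intermediate product is around 3e48, far below the uint256 limit, so a failure here would point at the logic rather than at an arithmetic overflow.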

The above example illustrates the value of adding fuzz testing to the test suite. While the unit test gave us a false sense of security, the fuzz test uncovered a subtle yet important bug that was hiding in plain sight.

[!TIP] Key Takeaways :

- Uncovering edge cases: By exploring a wide range of inputs, fuzz tests can reveal issues that occur only under specific, often unexpected conditions.

- Improving code robustness: Addressing issues found by fuzz tests often leads to more resilient and flexible code.

- Complementing unit tests: While unit tests verify specific scenarios, fuzz tests provide a broader coverage of possible inputs and states.

- Tuning: The fuzz tests are more likely to catch the edge cases when the parameter ranges are tuned properly.

As smart contract developers, we must embrace fuzz testing as an integral part of our testing strategy. It serves as a powerful tool to enhance the security and reliability of our contracts.


Advanced Testing

In the previous chapters, we looked at the basic tests that every project should implement to build a strong test suite. Once those tests are in place, it's time to move on to advanced testing, which further boosts confidence before going live. Advanced tests look deeper into how your contracts work together, handle more complicated scenarios, and stay reliable. By using advanced testing methods, you can find hidden problems that the basic tests might have missed.

However, it's important to plan these advanced tests carefully. Implementing them can take a lot of time, depending on your project's timeline and resources. Finding the right balance between testing and development is crucial. Not every advanced test type is necessary for every project. Depending on what your project focuses on, some tests will be more important than others, so it's crucial to set the right priorities.

For example:

- End-to-End (E2E) Tests can be crucial for bridge/layer-2 protocols because they ensure that all parts of the system work together seamlessly.

- Invariant Tests can be especially important for DeFi protocols.

- Differential Tests could be more useful for math-heavy projects where precise calculations are essential.

Types:

-

Invariant Tests: These tests helps ensure that certain important rules always stay true no matter what happens in your system. For example, making sure that the total supply of tokens never changes unexpectedly.

-

Differential Tests: Differential testing cross-references multiple implementations of the same function by comparing each one’s output.

-

Lifecycle Tests: These tests follow your contracts through all their stages, from when they are first created to when they are updated or closed. They make sure everything works as expected at each step.

-

Scenario Tests: Scenario testing uses real-life situations to see how your contracts handle them. By simulating what might happen in the real world, we can ensure the system behaves as expected.

-

End-to-End (E2E) Tests: As the name suggests, the E2E tests check the whole system end to end. They make sure all components of the system work together correctly, giving the confidence that everything functions as it should when everything is connected.

-

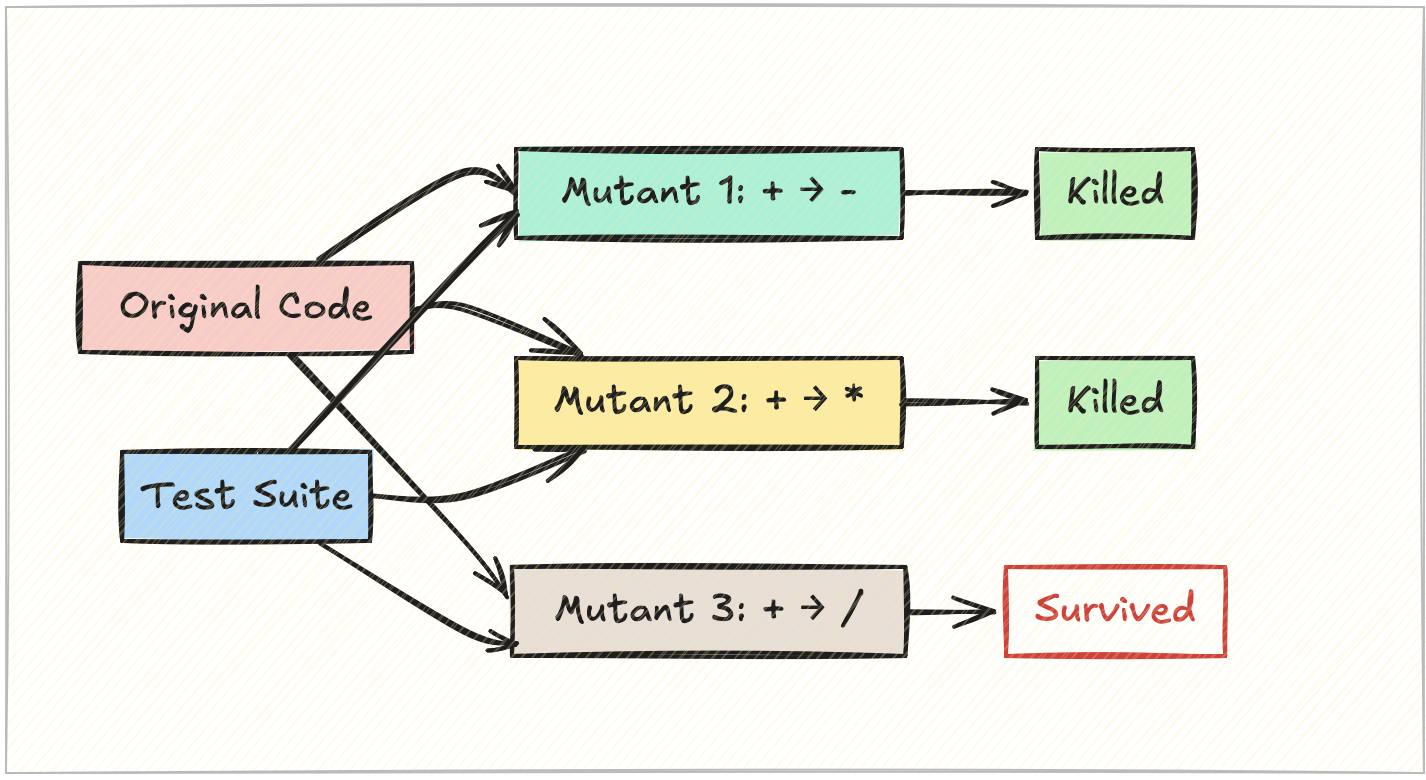

Mutation Tests: Mutation testing makes small changes to the contracts on purpose to see if our tests can catch them. This helps you check if our tests are strong enough to find mistakes.

Each of these advanced tests adds another layer of protection, helping to catch issues that basic tests might have missed. Although these advanced testing methods are powerful and add extra assurance before an audit, they should come only after the basic tests are implemented properly for the system, with at least 95% coverage.

Note: While this guide uses Foundry to show advanced testing methods, you can use these techniques with other testing tools too.

Remember: Not all advanced tests are needed for every project. Choose the ones that best fit your project's goals and complexity. Planning your testing strategy wisely will help you use your time and resources effectively, ensuring that your contracts are robust without affecting the time to market.

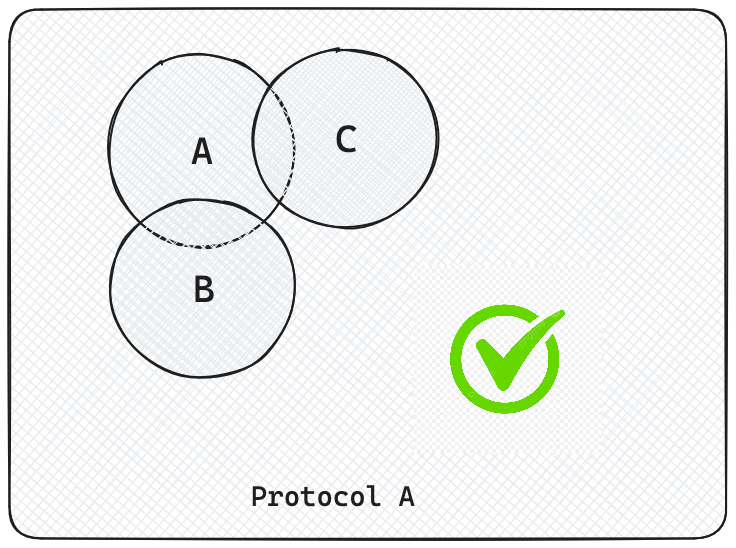

Invariant Testing

We just saw what fuzz tests are and how they can be useful. We mentioned that fuzz tests are "stateless", which means that they test a function in isolation. Invariant tests, on the other hand, are "stateful": they verify that the entire system behaves correctly by checking properties that are supposed to always hold true. They ensure that the state of the contract remains consistent with its expected properties, irrespective of the sequence of operations performed. An invariant is something that must always be true about the system, no matter how it is used. For example, the sum of all token balances in a liquidity pool might always need to equal the pool’s reserves.

Invariant tests are not limited to isolated contract methods; they observe how different functions interact with each other over time, ensuring that the core requirements of the protocol are respected under all circumstances. They are particularly powerful in the context of DeFi protocols, where interactions between different methods and contracts must consistently respect system-wide invariants.

Fuzzing vs. Invariant Testing

While both fuzzing and invariant testing are valuable tools in a developer's arsenal, they serve different purposes and have distinct characteristics:

Fuzzing

Fuzzing is a targeted approach to testing individual functions or methods:

- It focuses on a specific method of a contract, calling it multiple times with randomized inputs.

- The goal is to find edge cases or unexpected behaviors within a single function.

- Fuzzing is more "surgical" in nature, diving deep into the behavior of individual components.

Example of a fuzz test:

function testFuzz_Deposit(uint256 amount) public {

vm.assume(amount > 0 && amount <= token.balanceOf(user));

vm.prank(user);

pool.deposit(amount);

assertEq(pool.balanceOf(user), amount);

}

Invariant Testing

Invariant testing, on the other hand, takes a holistic approach:

- It verifies that certain properties (invariants) of the system remain true under all possible sequences of operations.

- Invariant tests can call multiple functions in random order with random inputs.

- The focus is on maintaining system-wide consistency rather than the behavior of individual functions.

Example of an invariant:

function invariant_totalSupplyEqualsSumOfBalances() public {

uint256 totalSupply = token.totalSupply();

uint256 sumOfBalances = 0;

for (uint256 i = 0; i < users.length; i++) {

sumOfBalances += token.balanceOf(users[i]);

}

assertEq(totalSupply, sumOfBalances);

}

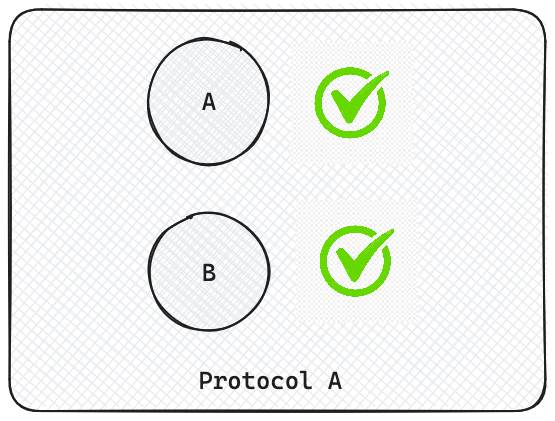

Types of Invariant Testing

Open Invariant Testing

Open invariant testing is the unrestricted form of invariant testing:

- All public and external functions of the contract under test are exposed to the fuzzer.

- The fuzzer can call any function with any arguments in any order.

- This approach can find complex bugs that arise from unexpected interactions between different parts of the system.

- However, it may also generate many unrealistic call sequences that wouldn't occur in practice.

Example setup:

contract OpenInvariantTest is Test {

LendingPool pool;

function setUp() public {

pool = new LendingPool();

targetContract(address(pool));

}

function invariant_totalBorrowsLessThanTotalDeposits() public {

assert(pool.totalBorrows() <= pool.totalDeposits());

}

}

Constrained Invariant Testing

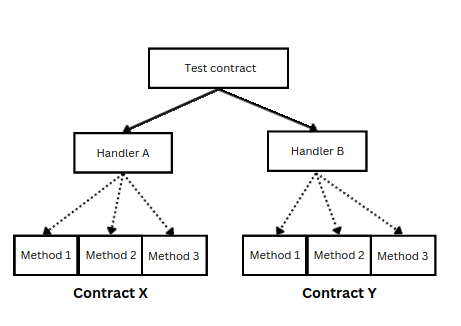

Constrained invariant testing uses the handler pattern to restrict the fuzzer's actions:

- Custom handler contracts define a set of actions that the fuzzer can perform.

- This allows for more realistic test scenarios that better reflect actual usage patterns.

- Handlers can incorporate preconditions and bounds on inputs to prevent unrealistic states.

- While more constrained, this approach can still uncover subtle bugs that might be missed by more targeted tests.

Example of a handler for constrained invariant testing:

contract LendingPoolHandler is Test {

LendingPool pool;

address[10] users;

constructor(LendingPool _pool, address[10] memory _users) {

pool = _pool;

users = _users;

}

function deposit(uint256 amount, uint256 userIndex) public {

amount = bound(amount, 1, 1000 ether);

userIndex = bound(userIndex, 0, users.length - 1); // valid indices are 0..9

address user = users[userIndex];

pool.deposit{value: amount}(user);

}

function borrow(uint256 amount, uint256 userIndex) public {

amount = bound(amount, 1, 100 ether);

userIndex = bound(userIndex, 0, users.length - 1); // valid indices are 0..9

address user = users[userIndex];

pool.borrow(amount, user);

}

}

In the above contract, notice that the handler, rather than the contract under test, is exposed to the fuzzer. This gives us more precise control over the tests. Handlers can also bound their inputs if necessary, but it's not mandatory. It's usually better to start with bounded tests and then slowly transition towards unbounded tests, depending on the requirements.

Example:

Okay, now let's look into a practical example where invariant tests can be useful.

We have a simple lending protocol implemented in Solidity below:

contract LendingProtocol {

mapping(address => uint256) public deposits;

mapping(address => uint256) public borrows;

IERC20 public token;

uint256 public constant COLLATERAL_FACTOR = 80; // 80% collateral factor

uint256 public totalDeposits;

uint256 public totalBorrows;

constructor(address _token) {

token = IERC20(_token);

}

function deposit(uint256 amount) external {

require(token.transferFrom(msg.sender, address(this), amount), "Transfer failed");

deposits[msg.sender] += amount;

totalDeposits += amount;

}

function borrow(uint256 amount) external {

uint256 maxBorrow = (deposits[msg.sender] * COLLATERAL_FACTOR) / 100;

require(borrows[msg.sender] + amount <= maxBorrow, "Exceeds borrow limit");

borrows[msg.sender] += amount;

totalBorrows += amount;

require(token.transfer(msg.sender, amount), "Transfer failed");

}

function repay(uint256 amount) external {

require(token.transferFrom(msg.sender, address(this), amount), "Transfer failed");

uint256 actualRepayment = amount > borrows[msg.sender] ? borrows[msg.sender] : amount;

borrows[msg.sender] -= actualRepayment;

totalBorrows -= actualRepayment;

if (amount > actualRepayment) {

uint256 excess = amount - actualRepayment;

deposits[msg.sender] += excess;

totalDeposits += excess;

}

}

function withdraw(uint256 amount) external {

require(deposits[msg.sender] > 0, "No deposits");

uint256 actualWithdrawal = amount > deposits[msg.sender] ? deposits[msg.sender] : amount;

deposits[msg.sender] -= actualWithdrawal;

totalDeposits -= actualWithdrawal;

require(token.transfer(msg.sender, actualWithdrawal), "Transfer failed");

}

}

The above contract allows users to deposit ERC20 tokens and borrow against their deposits. Key features include:

- Deposits: Users can deposit tokens, increasing their balance in the protocol.

- Borrowing: Users can borrow up to 80% (COLLATERAL_FACTOR) of their deposited amount.

- Repayment: Users can repay their loans, reducing their borrow balance.

- Withdrawal: Users can withdraw their deposits, but only if it doesn't leave them undercollateralized.

Defining Invariants

To ensure the protocol functions correctly, we define the following invariants:

- User Collateral Always Sufficient: A user's deposit should always cover their borrow according to the collateral factor.

- Total Deposits Greater Than Total Borrows: The total amount deposited in the protocol should always be greater than or equal to the total amount borrowed.

Here's the invariant test contract:

contract LendingProtocolInvariantTest is Test {

LendingProtocol public protocol;

MockERC20 public token;

Handler public handler;

function setUp() public {

token = new MockERC20("Test Token", "TEST");

protocol = new LendingProtocol(address(token));

handler = new Handler(address(protocol), address(token));

targetContract(address(handler));

}

function invariant_userCollateralAlwaysSufficient() public {

address[] memory users = handler.getUserList();

for (uint256 i = 0; i < users.length; i++) {

address user = users[i];

uint256 userDeposit = protocol.deposits(user);

uint256 userBorrow = protocol.borrows(user);

// If user has any borrows, they must maintain sufficient collateral

if (userBorrow > 0) {

uint256 requiredDeposit = (userBorrow * 100) / protocol.COLLATERAL_FACTOR();

assertGe(

userDeposit,

requiredDeposit,

"INVARIANT_INSUFFICIENT_COLLATERAL"

);

}

}

}

function invariant_totalDepositsGreaterThanBorrows() public {

assertGe(

protocol.totalDeposits(),

protocol.totalBorrows(),

"INVARIANT_DEPOSITS_GT_BORROW"

);

}

}

In the setUp() function, we call targetContract() (a helper that forge-std's Test contract inherits from StdInvariant) to tell the fuzzer to call only the functions defined in the handler contract. Without it, every contract deployed in setUp() would be fuzzed, which wastes resources. Similarly, you can use excludeContract() to leave specific contracts out, depending on your use case.
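To make the exclusion case concrete, here is a minimal sketch. `Counter` and `PriceFeedMock` are hypothetical contracts invented for this illustration; `excludeContract` is the forge-std helper mentioned above.

```solidity
// SPDX-License-Identifier: MIT
pragma solidity ^0.8.20;

import {Test} from "forge-std/Test.sol";

// Hypothetical contract under test.
contract Counter {
    uint256 public count;
    uint256 public increments;

    function increment() external {
        count += 1;
        increments += 1;
    }
}

// Hypothetical helper deployed in setUp() that we do not want the fuzzer to call.
contract PriceFeedMock {
    uint256 public price = 1e18;

    function setPrice(uint256 newPrice) external {
        price = newPrice;
    }
}

contract ExcludeContractExampleTest is Test {
    Counter counter;
    PriceFeedMock feed;

    function setUp() public {
        counter = new Counter();
        feed = new PriceFeedMock();

        // Both contracts are deployed here, so both would be fuzzed by default.
        // Excluding the mock keeps the fuzzer focused on Counter.
        excludeContract(address(feed));
    }

    function invariant_countMatchesIncrements() public {
        assertEq(counter.count(), counter.increments());
    }
}
```

Whether to target or exclude is mostly a matter of which list is shorter: with one handler and many supporting contracts, `targetContract` is usually the simpler choice.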

Handler Contract and Actors

As explained earlier, a handler contract is useful for simulating how users would interact with the contracts in the real world. In this case, users should deposit before borrowing or withdrawing, and they should borrow before trying to repay, so that the tests mimic real-world behaviour. Actors are the different addresses that interact with the system.

Foundry generates different sender addresses during an invariant run. To make use of these addresses, we prank the protocol calls with msg.sender in the handler.

contract Handler is Test {

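LendingProtocol public protocol;

MockERC20 public token;

// Tracks which addresses have already been recorded as actors

mapping(address => bool) public actorList;

// Every address that has interacted with the protocol

address[] public actors;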

constructor(address _protocol, address _token) {

protocol = LendingProtocol(_protocol);

token = MockERC20(_token);

}

function deposit(uint256 amount) public {

amount = bound(amount, 1, 10e20);

token.mint(msg.sender, amount);

vm.startPrank(msg.sender);

token.approve(address(protocol), type(uint256).max);

protocol.deposit(amount);

vm.stopPrank();

if (!actorList[msg.sender]) {

actorList[msg.sender] = true;

actors.push(msg.sender);

}

}

function borrow(uint256 amount) public {

address actor = msg.sender;

uint256 maxBorrow = (protocol.deposits(actor) * protocol.COLLATERAL_FACTOR()) / 100;

if (maxBorrow == 0) return;

amount = bound(amount, 1, maxBorrow);

vm.startPrank(actor);

try protocol.borrow(amount) {

// Borrow succeeded

} catch {

// Ignore failed borrows

}

vm.stopPrank();

if (!actorList[actor]) {

actorList[actor] = true;

actors.push(actor);

}

}

function withdraw(uint256 amount) public {

address actor = msg.sender;

uint256 currentDeposit = protocol.deposits(actor);

if (currentDeposit == 0) return;

amount = bound(amount, 1, currentDeposit);

vm.prank(actor);

protocol.withdraw(amount);

if (!actorList[actor]) {

actorList[actor] = true;

actors.push(actor);

}

}

function repay(uint256 amount) public {

address actor = msg.sender;

uint256 currentBorrow = protocol.borrows(actor);

if (currentBorrow == 0) return;

amount = bound(amount, 1, currentBorrow);

token.mint(actor, amount);

vm.startPrank(actor);

token.approve(address(protocol), amount);

protocol.repay(amount);

vm.stopPrank();

if (!actorList[actor]) {

actorList[actor] = true;

actors.push(actor);

}

}

function getUserList() external view returns (address[] memory) {

return actors;

}

}

In the Handler contract, we maintain a list of users with the actors array and actorList mapping to track all the users who have interacted with the protocol. When a user performs an action (deposit, borrow, withdraw, repay), they are added to the list if they aren't already present. This allows the invariant tests to iterate over all users to verify that the invariants hold for every one.

This ensures that the system behaves as expected across multiple user interactions.

Based on our test setup, all the functions in the handler contract will be randomly called by the fuzzer. If you want to restrict the fuzzer to specific functions, you can do that as well. For example, if you want the fuzzer to ignore the repay() method, you can list only the remaining selectors via the targetSelector() helper.

bytes4[] memory selectors = new bytes4[](3);

selectors[0] = Handler.deposit.selector;

selectors[1] = Handler.withdraw.selector;

selectors[2] = Handler.borrow.selector;

targetSelector(FuzzSelector({

addr: address(handler),

selectors: selectors

}));

Running the Invariant Tests

Having defined the handlers and invariants, let's go ahead and run the invariant tests.

forge t --mc LendingProtocolInvariantTest -vv

We can see that the invariant test failed with the error INVARIANT_INSUFFICIENT_COLLATERAL. This is critical, as one of the core invariants has been violated.

[FAIL: <empty revert data>]

[Sequence]

sender=0x00000000000000000000002eA38b54cE5a819AF6 addr=[test/invariant/Invariant.t.sol:Handler]0xF62849F9A0B5Bf2913b396098F7c7019b51A820a calldata=deposit(uint256) args=[268086407878502856564320633721989845494868808503440654 [2.68e53]]

sender=0x00000000000000000000002eA38b54cE5a819AF6 addr=[test/invariant/Invariant.t.sol:Handler]0xF62849F9A0B5Bf2913b396098F7c7019b51A820a calldata=borrow(uint256) args=[1083181390655043523035 [1.083e21]

sender=0x0000000000000000000000000000000000657374 addr=[test/invariant/Invariant.t.sol:Handler]0xF62849F9A0B5Bf2913b396098F7c7019b51A820a calldata=deposit(uint256) args=[149284093665934295474410336178711275202335643493951105 [1.492e53]]

sender=0x00000000000000000000002eA38b54cE5a819AF6 addr=[test/invariant/Invariant.t.sol:Handler]0xF62849F9A0B5Bf2913b396098F7c7019b51A820a calldata=withdraw(uint256) args=[2442579456253310227425442841 [2.442e27]]

invariant_userCollateralAlwaysSufficient() (runs: 1, calls: 1, reverts: 1)

Logs:

Error: INVARIANT_INSUFFICIENT_COLLATERAL

Error: a >= b not satisfied [uint]

Value a: 262567983659900320649

Value b: 508481869510300963140

The invariant test invariant_userCollateralAlwaysSufficient() is designed to ensure that each user's deposit always meets or exceeds the required collateral based on their borrow. The test runs multiple random sequences of user interactions to check this condition.

In this case, the test failed, indicating that the invariant was violated. You can also see that the failure output provides a sequence of function calls that led to the violation.

From the above output, here's the sequence that triggered the bug:

- Deposit: A user deposits an amount.

- Borrow: The same user borrows an amount within their allowed limit.

- Deposit: Another deposit is made (could be by the same or a different user).

- Withdraw: The initial user withdraws an amount.

Let's break down this sequence with some example values to better understand what the error is:

- User Deposits 500 ETH
  - Deposits: 500 ETH
  - Borrows: 0 ETH
  - Collateral Factor: 80%
  - Max Borrow: (500 * 80%) = 400 ETH

- User Borrows 400 ETH
  - Deposits: 500 ETH
  - Borrows: 400 ETH
  - Required Collateral: (400 * 100) / 80 = 500 ETH
  - Available to Withdraw: 500 - 500 = 0 ETH

- User Deposits an Additional 100 ETH
  - Deposits: 600 ETH
  - Borrows: 400 ETH
  - Required Collateral: (400 * 100) / 80 = 500 ETH
  - Available to Withdraw: 600 - 500 = 100 ETH

- User Withdraws 200 ETH
  - Attempting to Withdraw: 200 ETH
  - Available to Withdraw: 100 ETH
  - Issue: The withdraw function allows withdrawal without properly checking if it leaves the user undercollateralized.
  - After Withdrawal:
    - Deposits: 600 - 200 = 400 ETH
    - Borrows: 400 ETH
    - Required Collateral: (400 * 100) / 80 = 500 ETH
    - Actual Collateral: 400 ETH
    - Collateral Deficit: 500 - 400 = 100 ETH

The user was able to withdraw more than the available amount, leaving their collateral insufficient to cover their borrow.
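The arithmetic in this walkthrough can be checked with a small standalone test. This is only a sketch of the numbers, not of the protocol itself: the `requiredCollateral` helper and the local variables are hypothetical and simply mirror the formulas used above, with 1 ether standing in for 1 ETH.

```solidity
// SPDX-License-Identifier: MIT
pragma solidity ^0.8.20;

import {Test} from "forge-std/Test.sol";

contract CollateralWalkthroughTest is Test {
    uint256 constant COLLATERAL_FACTOR = 80;

    // Same formula the walkthrough uses for "Required Collateral".
    function requiredCollateral(uint256 borrowed) internal pure returns (uint256) {
        return (borrowed * 100) / COLLATERAL_FACTOR;
    }

    function test_WalkthroughNumbers() public {
        // Steps 1 and 2: deposit 500, borrow the maximum of 400.
        uint256 deposits = 500 ether;
        uint256 borrows = (deposits * COLLATERAL_FACTOR) / 100;
        assertEq(borrows, 400 ether);
        assertEq(requiredCollateral(borrows), 500 ether);

        // Step 3: deposit another 100, leaving 100 free to withdraw.
        deposits += 100 ether;
        assertEq(deposits - requiredCollateral(borrows), 100 ether);

        // Step 4: a withdrawal capped only by the deposit balance lets 200 through.
        uint256 requested = 200 ether;
        uint256 actualWithdrawal = requested > deposits ? deposits : requested;
        deposits -= actualWithdrawal;

        // The position is now 100 short of the required collateral.
        assertEq(deposits, 400 ether);
        assertEq(requiredCollateral(borrows) - deposits, 100 ether);
    }
}
```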

The invariant test detected that the user's deposit (a = 262567983659900320649) was less than the required collateral (b = 508481869510300963140). This violates the invariant that the user's deposit must always be greater than or equal to the required collateral.

Voila! The invariant tests helped us catch the bug in our code.

Fixing the Bug

Original withdraw Function

function withdraw(uint256 amount) external {

require(deposits[msg.sender] > 0, "No deposits");

uint256 actualWithdrawal = amount > deposits[msg.sender] ? deposits[msg.sender] : amount;

deposits[msg.sender] -= actualWithdrawal;

totalDeposits -= actualWithdrawal;

require(token.transfer(msg.sender, actualWithdrawal), "Transfer failed");

}

The function does not check if the withdrawal would leave the user's collateral below the required level to secure their borrow.

Fixing the withdraw Function

To fix this, we need to modify the withdraw function to ensure users cannot withdraw collateral that would leave their loans undercollateralized.

function withdraw(uint256 amount) external {

require(deposits[msg.sender] > 0, "No deposits");

// Calculate the required collateral based on current borrows

uint256 requiredCollateral = (borrows[msg.sender] * 100) / COLLATERAL_FACTOR;

require(deposits[msg.sender] >= requiredCollateral, "insufficient collateral");

// Calculate the maximum amount that can be withdrawn

uint256 availableToWithdraw = deposits[msg.sender] - requiredCollateral;

uint256 actualWithdrawal = amount > availableToWithdraw ? availableToWithdraw : amount;

require(actualWithdrawal > 0, "insufficient funds");

deposits[msg.sender] -= actualWithdrawal;

totalDeposits -= actualWithdrawal;

require(token.transfer(msg.sender, actualWithdrawal), "Transfer failed");

}

Before allowing a withdrawal, we calculate the requiredCollateral based on the user's current borrow. Then, we determine availableToWithdraw by subtracting requiredCollateral from the user's deposits, so that the user can only withdraw up to availableToWithdraw. This ensures that the user maintains sufficient collateral after the withdrawal.

After applying the fix, we rerun the tests and get the following output:

Ran 2 tests for test/invariant/LendingInvariantTest.t.sol:LendingInvariantTest

[PASS] invariant_totalDepositsGreaterThanBorrows() (runs: 256, calls: 128000, reverts: 0)

[PASS] invariant_userCollateralAlwaysSufficient() (runs: 256, calls: 128000, reverts: 0)

Suite result: ok. 2 passed; 0 failed; 0 skipped; finished in 70.86s (86.28s CPU time)

The tests now pass with 0 reverts, confirming that the invariants hold and the bug has been fixed.

[!TIP] You can set the show_metrics flag to true in your foundry config file to see the call metrics of your invariant tests.

[PASS] invariant_totalDepositsGreaterThanBorrows() (runs: 256, calls: 128000, reverts: 0)

| Contract | Selector | Calls | Reverts | Discards |
|----------|----------|-------|---------|----------|
| Handler | borrow | 25440 | 0 | 0 |
| Handler | deposit | 25753 | 0 | 0 |
| Handler | repay | 25653 | 0 | 0 |
| Handler | withdraw | 25766 | 0 | 0 |

You can see the number of calls to each method in our Handler contract.

How the Invariant Test Helped Find the Bug

The invariant test was crucial in detecting the subtle bug in the withdraw function. Here's how it helped:

- Automated Detection: The invariant test automatically ran numerous sequences of user interactions, simulating real-world usage patterns.

- Sequence Reproduction: It provided the exact sequence of actions that led to the invariant violation, making it easier to reproduce and analyze the bug.

Final Thoughts

This example highlights the importance of invariant testing in smart contract development:

- Detecting Edge Cases: Invariant tests can uncover issues that may not be evident through standard unit tests, especially with extreme values or unusual sequences of actions.

- Ensuring Protocol Safety: By continuously checking critical conditions, invariant tests help ensure the protocol remains secure under all circumstances.

- Facilitating Debugging: Providing detailed logs and sequences aids developers in quickly pinpointing and fixing bugs.

By incorporating invariant testing into the development process, we enhance the robustness and reliability of smart contracts, making them safer for users.

Resources:

- https://mirror.xyz/horsefacts.eth/Jex2YVaO65dda6zEyfM_-DXlXhOWCAoSpOx5PLocYgw

- https://allthingsfuzzy.substack.com/p/creating-invariant-tests-for-an-amm

- https://book.getfoundry.sh/forge/invariant-testing#invariant-testing

Differential Testing

Differential testing is quite interesting. It's a testing technique where multiple implementations of the same specification are compared against each other. You can think of it as something similar to back-to-back testing or A/B testing from the web2 world. The key goal is to identify differences in behaviours under the same inputs to diagnose the defect in one or more implementations.

In differential testing:

- In addition to the Solidity implementation, at least one other implementation of the same specification is required.

- The same inputs are fed to all implementations.

- We compare the outputs or behavior to check for differences.

- We investigate whether the differences are caused by bugs or are acceptable.

Differential testing is particularly beneficial in the following scenarios:

- Identifying edge cases for protocols that have complex, math-heavy logic.

- It serves as a form of cross-verification, increasing confidence in the correctness of the contract.
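The steps above can be sketched without leaving Solidity by comparing two independent implementations of the same specification. The example below is an assumption made purely for illustration: it checks a loop-based sum of 1..n against the closed-form formula, and the function names are hypothetical.

```solidity
// SPDX-License-Identifier: MIT
pragma solidity ^0.8.20;

import {Test} from "forge-std/Test.sol";

contract SumDifferentialTest is Test {
    // Implementation A: straightforward loop.
    function sumLoop(uint256 n) internal pure returns (uint256 total) {
        for (uint256 i = 1; i <= n; i++) {
            total += i;
        }
    }

    // Implementation B: closed-form formula n(n + 1) / 2.
    function sumFormula(uint256 n) internal pure returns (uint256) {
        return (n * (n + 1)) / 2;
    }

    // Feed the same input to both implementations and compare the outputs.
    function testDiff_Sum(uint256 n) public {
        n = bound(n, 0, 5_000); // keep the loop cheap
        assertEq(sumLoop(n), sumFormula(n), "implementations disagree");
    }
}
```

A mismatch here would not say which side is wrong, only that the two disagree, which is exactly the investigation step described in the list.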

Example: Computing the Nth Fibonacci Number

To illustrate differential testing, let's consider a simple example of computing the nth Fibonacci number using Solidity and comparing the behaviour against the Rust implementation. By implementing the same algorithm in both languages and comparing the outputs for a range of inputs, we can validate the correctness of our implementations.

Solidity Implementation

// SPDX-License-Identifier: MIT

pragma solidity ^0.8.0;

contract Fibonacci {

function fib(uint n) public pure returns (uint) {

require(n >= 0, "Input must be non-negative");

if (n == 0) return 0;

uint a = 0;

uint b = 1;

for (uint i = 1; i < n; i++) {

uint c = a + b;

a = b;

b = c;

}

return b;

}

}

Rust Implementation

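fn fib(n: u32) -> u32 {
    // n is unsigned, so it is non-negative by construction.
    if n == 0 {
        return 0;
    }
    let mut a: u32 = 0;
    let mut b: u32 = 1;
    for _ in 1..n {
        let c = a + b;
        a = b;
        b = c;
    }
    b
}

fn main() {
    // Read n from the first command-line argument so the Foundry test
    // can pass its input through vm.ffi().
    let n: u32 = std::env::args()
        .nth(1)
        .expect("usage: fibonacci <n>")
        .parse()
        .expect("n must be a non-negative integer");
    // Print the result as a 0x-prefixed 32-byte hex word so that
    // abi.decode(ffiResult, (uint)) can read it on the Solidity side.
    println!("0x{:064x}", fib(n));
}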

To test this in Foundry, we can use the vm.ffi() cheatcode. It allows us to call external programs or scripts from within Solidity tests. This is quite useful here, as it enables us to run arbitrary commands and implement advanced testing patterns like this one. Note that FFI is disabled by default and has to be enabled explicitly, either with ffi = true in foundry.toml or with the --ffi flag.

The vm.ffi() cheatcode accepts an array of strings where:

- The first element is the path to the external program or script you want to execute.

- The subsequent elements are the arguments to pass to the program or script.

- The output of the command is returned as a bytes object, which can be decoded into the desired type (e.g., uint, string).

You can find more details about the ffi cheatcode here.
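Before wiring in a real binary, it can help to see the round trip on its own. The sketch below assumes FFI is enabled and that an `echo` command accepting `-n` is available on the machine running the tests; it simply echoes back an ABI-encoded value and decodes it.

```solidity
// SPDX-License-Identifier: MIT
pragma solidity ^0.8.20;

import {Test} from "forge-std/Test.sol";

contract FfiEchoTest is Test {
    function test_FfiRoundTrip() public {
        string[] memory cmd = new string[](3);
        cmd[0] = "echo"; // program to execute (assumed to be on the PATH)
        cmd[1] = "-n"; // first argument: suppress the trailing newline
        // Second argument: the ABI encoding of 42 as a 0x-prefixed hex string.
        cmd[2] = vm.toString(abi.encode(uint256(42)));

        // Hex output is turned back into raw bytes by the cheatcode.
        bytes memory out = vm.ffi(cmd);

        uint256 decoded = abi.decode(out, (uint256));
        assertEq(decoded, uint256(42));
    }
}
```

Because FFI runs arbitrary commands on the host, it is worth enabling it only for the test profiles that actually need it.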

Here’s an expanded example of a test file using FFI to compare the Fibonacci computation in Solidity and Rust:

// test/FibonacciTest.t.sol

pragma solidity ^0.8.24;

import "forge-std/Test.sol";

import "../contracts/Fibonacci.sol";

contract FibonacciTest is Test {

Fibonacci private fibonacci;

string private constant RUST_BINARY = "./target/release/fibonacci";

// Define test cases with expected outputs

struct TestCase {

uint input;

uint expectedOutput;

}

TestCase[] private testCases;

/// @notice Sets up the contract before each test.

function setUp() public {

fibonacci = new Fibonacci();

// Initialize test cases with known Fibonacci numbers

testCases.push(TestCase(0, 0));

testCases.push(TestCase(1, 1));

testCases.push(TestCase(2, 1));

testCases.push(TestCase(3, 2));

testCases.push(TestCase(4, 3));

testCases.push(TestCase(5, 5));

testCases.push(TestCase(6, 8));

testCases.push(TestCase(7, 13));

testCases.push(TestCase(8, 21));

testCases.push(TestCase(9, 34));

testCases.push(TestCase(10, 55));

}

/// @notice Tests the Fibonacci implementation using predefined test cases

function testFibonacciWithTestCases() public {

for (uint i = 0; i < testCases.length; i++) {

TestCase memory tc = testCases[i];

// Test Solidity implementation

uint solResult = fibonacci.fib(tc.input);

assertEq(

solResult,

tc.expectedOutput,

string.concat(

"Solidity implementation failed for input: ",

vm.toString(tc.input)

)

);

// Test Rust implementation via FFI

string[] memory inputs = new string[](2);

inputs[0] = RUST_BINARY;

inputs[1] = vm.toString(tc.input);

bytes memory ffiResult = vm.ffi(inputs);

uint rustResult = abi.decode(ffiResult, (uint));

assertEq(

rustResult,

tc.expectedOutput,

string.concat(

"Rust implementation failed for input: ",

vm.toString(tc.input)

)

);

// Compare Solidity and Rust implementations

assertEq(

solResult,

rustResult,

string.concat(

"Mismatch between Solidity and Rust results for input: ",

vm.toString(tc.input)

)

);

}

}

}

If all outputs match, we gain confidence in the correctness of both implementations. If discrepancies occur, they may indicate a bug in one of the implementations or an issue with integer overflow, especially in languages or environments with different integer size limits.

The above is the simplest form of differential testing. In the Fibonacci example, we limited ourselves to a fixed set of inputs and outputs, which is not very effective. We can make it more effective by exposing the methods to the fuzzer to make sure the implementation is robust enough. This is where differential fuzzing comes into the picture.

Differential Fuzz Testing

Differential fuzzing is a testing technique that involves executing different implementations of the same function or logic and comparing the results. This technique allows us to verify that the different implementations are equivalent and behave consistently, even when provided with unexpected, invalid, or random inputs. This is different from normal fuzzing which typically tests a single implementation by feeding it a wide range of inputs and monitoring for unexpected behavior, crashes, or security vulnerabilities.
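Applied to the Fibonacci example, a differential fuzz test might look like the sketch below. It rests on several assumptions: FFI is enabled, a binary exists at the path used in the earlier test, and that binary reads n from its first argument and prints the result as a 0x-prefixed 32-byte hex word. The Solidity implementation is repeated inline only to keep the sketch self-contained.

```solidity
// SPDX-License-Identifier: MIT
pragma solidity ^0.8.24;

import {Test} from "forge-std/Test.sol";

// Inline copy of the iterative Solidity implementation.
contract Fibonacci {
    function fib(uint256 n) public pure returns (uint256) {
        if (n == 0) return 0;
        uint256 a = 0;
        uint256 b = 1;
        for (uint256 i = 1; i < n; i++) {
            uint256 c = a + b;
            a = b;
            b = c;
        }
        return b;
    }
}

contract FibonacciDiffFuzzTest is Test {
    Fibonacci private fibonacci;

    // Assumed location of the compiled Rust binary.
    string private constant RUST_BINARY = "./target/release/fibonacci";

    function setUp() public {
        fibonacci = new Fibonacci();
    }

    function testFuzz_DiffFib(uint256 n) public {
        // fib(47) = 2971215073 is the largest Fibonacci number that fits in a u32,
        // so larger inputs would overflow on the Rust side.
        n = bound(n, 0, 47);

        string[] memory cmd = new string[](2);
        cmd[0] = RUST_BINARY;
        cmd[1] = vm.toString(n);

        uint256 rustResult = abi.decode(vm.ffi(cmd), (uint256));
        assertEq(fibonacci.fib(n), rustResult, "Solidity and Rust disagree");
    }
}
```

Each fuzz run spawns a process, so a lower run count than usual may be a sensible trade-off for tests of this kind.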

For example, here's a script from Enbang Wu that tests several widely used Solidity math libraries. In this project, they performed differential fuzzing on different fixed-point libraries (OpenZeppelin, Solmate, Solady and prb-math) and found broad compatibility among them, with some differences in handling edge cases and gas efficiency.

function test_diffMulDivUp(uint256 x, uint256 y, uint256 z) public {

if (y > 1) {

x = x % ((type(uint256).max / y) + 1);

}

if (z > 0) { // assume that the divisor is not zero

uint256 ozResult = instance.OzMulDivUp(x, y, z);

uint256 soladyResult = instance.soladyMulDivUp(x, y, z);

uint256 solmateResult = instance.solmateMulDivUp(x, y, z);

require(

ozResult == soladyResult && soladyResult == solmateResult

);

}

}

function test_diffMulWadUp(uint256 x, uint256 y) public {

if (y > 1) {

x = x % ((type(uint256).max / y) + 1);

}

uint256 solmateResult = instance.solmateMulWadUp(x, y);

uint256 soladyResult = instance.soladyMulWadUp(x, y);

require(

solmateResult == soladyResult

);

}

It helps verify the correctness as well as gas efficiency of the libraries.

| Function Name | OpenZeppelin | Solady | Solmate | PRB-Math |
|---|---|---|---|---|
| log2 | 677 | 546 | N/A | N/A |
| log2Up | 796 | 638 | N/A | N/A |
| mulDivDown | 674 | 504 | 500 | 581 |
| mulDivUp | 809 | 507 | 526 | N/A |
| sqrt | 1146 | 683 | 685 | 977 |
| divWadUp | N/A | 500 | 525 | N/A |
| mulWadUp | N/A | 519 | 525 | N/A |

Things to keep in mind:

- It is important that the same data types are used across implementations to avoid spurious discrepancies.

- Keep in mind the boundary conditions and edge cases in your inputs.

- Keep an eye out for exceptions: compare not just the outputs but also any reverts or other odd behavior.

- Applying this technique to the math-heavy or complex functions is usually sufficient; there is no need to cover every method.
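The point about reverts can be sketched with try/catch: record whether each implementation succeeded, require that they agree on that, and only then compare outputs. `DivUpA` and `DivUpB` below are hypothetical implementations of a round-up division written for this illustration.

```solidity
// SPDX-License-Identifier: MIT
pragma solidity ^0.8.20;

import {Test} from "forge-std/Test.sol";

// Hypothetical implementation A: explicit zero check.
contract DivUpA {
    function divUp(uint256 x, uint256 y) external pure returns (uint256) {
        require(y != 0, "div by zero");
        return x == 0 ? 0 : (x - 1) / y + 1;
    }
}

// Hypothetical implementation B: relies on the built-in division-by-zero panic.
contract DivUpB {
    function divUp(uint256 x, uint256 y) external pure returns (uint256) {
        return x / y + (x % y == 0 ? 0 : 1);
    }
}

contract DivUpDifferentialTest is Test {
    DivUpA implA = new DivUpA();
    DivUpB implB = new DivUpB();

    function testDiff_DivUp(uint256 x, uint256 y) public {
        bool okA;
        bool okB;
        uint256 resA;
        uint256 resB;

        try implA.divUp(x, y) returns (uint256 r) {
            okA = true;
            resA = r;
        } catch {}

        try implB.divUp(x, y) returns (uint256 r) {
            okB = true;
            resB = r;
        } catch {}

        // Both must succeed or both must revert...
        assertEq(okA, okB, "revert behaviour differs");
        // ...and when they succeed, the outputs must match.
        if (okA) assertEq(resA, resB, "outputs differ");
    }
}
```

This sketch treats any revert as equivalent. If the revert reason matters for your protocol, the catch blocks could capture and compare the returned error data as well.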

Conclusion

Differential testing is a useful technique for enhancing the reliability and security of math-heavy logic. Because it compares multiple implementations of the same functionality, we can spot bugs that might have gone unnoticed by basic tests. When combined with fuzz testing, this approach becomes even more robust, automatically exploring a wide range of inputs and conditions.

In the high-stakes environment of smart contracts, employing differential testing on top of other tests contributes significantly to building trustworthy and secure protocols. As our ecosystem continues to grow, integrating these testing practices will be crucial for developers aiming to deliver robust and reliable smart contracts.

References

- VRGDA Differential Testing by transmissions11

- Differential Fuzzing Report by Enbang Wu

- Verifying the correctness of Solidity Merkle Tree implementation

Lifecycle Tests

To re-iterate: smart contracts are unique software entities that, once deployed, often control significant financial assets and execute critical business logic autonomously. Unlike traditional software that can be patched or updated easily, smart contracts require careful verification of their entire operational lifespan. This chapter explores lifecycle testing, a comprehensive approach to ensuring smart contracts behave correctly throughout their existence.

[!INFO] I first discovered lifecycle tests for smart contracts in the maple-core-v2 repo. It has one of the best test suites out there, and I have been a fan ever since.

Lifecycle tests are an advanced form of end-to-end test designed to validate the behavior of a smart contract throughout its entire lifecycle. They ensure that the contract behaves correctly over time, especially as it moves through different states and handles the sequences of operations that might occur during its lifespan. The main goal is to verify that the contract maintains integrity and correctness across all possible state changes.

For a smart contract, this lifecycle (its "life story") includes several critical phases:

- Deployment and initialization

- Configuration and setup

- Active operation period

- State transitions and upgrades

- Emergency scenarios

For example, consider a token vesting contract. Its lifecycle begins when deployed, progresses through initialization where beneficiaries and schedules are set, enters an active phase where tokens gradually vest, handles claims throughout its life, and eventually completes when all tokens are distributed. Each of these phases must be thoroughly tested to ensure the contract behaves correctly throughout its existence.

Why not unit/integration tests?

While unit tests focus on individual functions and integration tests verify component interactions, lifecycle tests examine how a contract's state and behavior evolve through every stage. Think of it this way:

- Unit Tests are like checking individual car parts - the engine, wheels, brakes - in isolation.

- Integration Tests verify these parts work together - the engine powers the wheels, brakes stop the car.

- Lifecycle Tests ensure the car performs correctly throughout its entire lifespan - from factory assembly to years of operation.

Common Contract Lifecycle Patterns

Smart contracts often follow predictable lifecycle patterns based on their purpose:

- Time-Based Progression: Contracts that mature or evolve based on time, like vesting schedules or escrow. These contracts transition through states based on temporal triggers.

- User-Driven Evolution: Contracts that progress based on user actions, like governance systems where proposal submission and voting drive state changes.

- Event-Triggered Changes: Contracts that respond to external events or oracle data, transitioning states based on market conditions or other triggers.

Example #1: Lending Contract

Here's a quick example of event-triggered changes. We'll reuse the advanced lending contract implemented earlier, which lets users deposit collateral, borrow against it, and repay loans, and extend it with a new liquidate() method. It suits this pattern because its state changes in response to both user actions and market conditions (price changes).

    contract LendingWithLiquidation is AdvancedLending {
        uint256 public constant LIQUIDATION_THRESHOLD = 850; // 85% of collateral value
        uint256 public constant LIQUIDATION_BONUS = 50; // 5% bonus for liquidators
        uint256 public price; // Price of the token in USD (18 decimal places)

        ...
        ...

        // basic health check and liquidation method
        function liquidate(address borrower, uint256 amount) external {
            uint256 borrowerDebt = borrows[borrower];
            if (borrowerDebt == 0) revert NoDebtToLiquidate();

            // Deposit valued at the current price, scaled by the collateral factor.
            uint256 collateralValue = (deposits[borrower] * price * COLLATERAL_FACTOR) / 1000 / 1e18;

            // Healthy positions (debt within the threshold) cannot be liquidated.
            if (borrowerDebt * 1000 <= collateralValue * LIQUIDATION_THRESHOLD) revert PositionNotLiquidatable();

            // A single call can repay at most LIQUIDATION_THRESHOLD / 1000 of the debt.
            uint256 maxLiquidation = (borrowerDebt * LIQUIDATION_THRESHOLD) / 1000;
            uint256 actualLiquidation = amount > maxLiquidation ? maxLiquidation : amount;

            // Collateral seized for the repaid amount, plus the liquidator's bonus.
            uint256 collateralToLiquidate = (actualLiquidation * 1e18 * 1000) / (price * COLLATERAL_FACTOR);
            uint256 liquidationBonus = (collateralToLiquidate * LIQUIDATION_BONUS) / 1000;
            uint256 totalCollateralToLiquidator = collateralToLiquidate + liquidationBonus;

            if (deposits[borrower] < totalCollateralToLiquidator) revert InsufficientCollateral();

            // Pull the repayment from the liquidator.
            if (!token.transferFrom(msg.sender, address(this), actualLiquidation)) revert TransferFailed();

            // Reduce the borrower's debt and collateral, and the protocol totals.
            borrows[borrower] -= actualLiquidation;
            totalBorrows -= actualLiquidation;
            deposits[borrower] -= totalCollateralToLiquidator;
            totalDeposits -= totalCollateralToLiquidator;

            // Pay out the seized collateral and bonus to the liquidator.
            if (!token.transfer(msg.sender, totalCollateralToLiquidator)) revert TransferFailed();
        }
    }

Here's an example of how a lifecycle test for this contract might look using Foundry:

    function testLendingLifecycle() public {
        console.log("Step 1: User deposits tokens");
        vm.startPrank(user);
        token.approve(address(lending), 1000 ether);
        lending.deposit(1000 ether);
        vm.stopPrank();
        assertEq(lending.deposits(user), 1000 ether);
        assertEq(lending.totalDeposits(), 1000 ether);

        console.log("Step 2: User borrows against collateral");
        vm.prank(user);
        lending.borrow(700 ether);
        assertEq(lending.borrows(user), 700 ether);
        assertEq(lending.totalBorrows(), 700 ether);

        console.log("Step 3: Attempt to borrow more than allowed");
        vm.expectRevert("Exceeds borrow limit");
        vm.startPrank(user);
        lending.borrow(150 ether);
        vm.stopPrank();

        console.log("Step 4: Partial repayment");
        vm.startPrank(user);
        token.approve(address(lending), 200 ether);
        lending.repay(200 ether);
        vm.stopPrank();
        assertEq(lending.borrows(user), 500 ether);
        assertEq(lending.totalBorrows(), 500 ether);

        console.log("Step 5: Withdraw some funds");
        vm.prank(user);
        lending.withdraw(100 ether);
        assertEq(lending.deposits(user), 900 ether);
        assertEq(lending.totalDeposits(), 900 ether);

        console.log("Step 6: Set up for liquidation");
        vm.prank(user);
        lending.borrow(200 ether);
        assertEq(lending.borrows(user), 700 ether);

        console.log("Step 7: Price drop, making the position liquidatable");
        lending.setPrice(0.8 ether); // 20% price drop

        console.log("Step 8: Liquidator attempts to liquidate");
        uint256 liquidatorBalanceBefore = token.balanceOf(liquidator);
        vm.startPrank(liquidator);
        token.approve(address(lending), 300 ether);
        lending.liquidate(user, 300 ether);
        vm.stopPrank();
        uint256 liquidatorBalanceAfter = token.balanceOf(liquidator);
        assertLt(lending.borrows(user), 700 ether);
        assertLt(lending.deposits(user), 900 ether);
        assertGt(liquidatorBalanceAfter, liquidatorBalanceBefore);
        console.log("Liquidator balance before:", liquidatorBalanceBefore);
        console.log("Liquidator balance after:", liquidatorBalanceAfter);
        console.log(
            "Collateral received by liquidator:",
            liquidatorBalanceAfter - liquidatorBalanceBefore
        );

        console.log("Step 9: User repays remaining debt");
        uint256 remainingDebt = lending.borrows(user);
        vm.startPrank(user);
        token.approve(address(lending), remainingDebt);
        lending.repay(remainingDebt);
        vm.stopPrank();
        assertEq(lending.borrows(user), 0);

        console.log("Step 10: User withdraws remaining collateral");
        uint256 remainingDeposit = lending.deposits(user);
        vm.prank(user);
        lending.withdraw(remainingDeposit);
        assertEq(lending.deposits(user), 0);
        assertEq(lending.totalBorrows(), 0);
        assertEq(lending.totalDeposits(), 0);
        assertLt(token.balanceOf(user), INITIAL_BALANCE);
        assertGt(token.balanceOf(liquidator), INITIAL_BALANCE);
    }

Sample output:

    Step 1: User deposits tokens
    Step 2: User borrows against collateral
    Step 3: Attempt to borrow more than allowed
    Step 4: Partial repayment
    Step 5: Withdraw some funds
    Step 6: Set up for liquidation
    Step 7: Price drop, making the position liquidatable
    Step 8: Liquidator attempts to liquidate
    Liquidator balance before: 10000000000000000000000
    Liquidator balance after: 10192187500000000000000
    Collateral received by liquidator: 192187500000000000000
    Step 9: User repays remaining debt
    Step 10: User withdraws remaining collateral

As you can see, the test above walks through a complete lending lifecycle, from deposit to final withdrawal, including a liquidation:

- The user deposits tokens as collateral.

- The user borrows against that collateral.

- The user tries to borrow too much and is told "no".

- The user repays part of what was borrowed.

- The user withdraws part of the original deposit.

- The user borrows some more.

- The value of the collateral drops (market crash).

- A liquidator steps in and liquidates part of the position.

- The user repays the rest of the debt.

- The user withdraws whatever collateral is left and exits.

At each step, we're checking that everything works as it should. It's like making sure all the gears in a machine are turning correctly as we put it through its paces.

This test is quite effective because it doesn't just check one thing at a time. Instead, it looks at how everything works together, just like it would in the real world. It helps us catch problems that might only show up after a bunch of different things happen one after another. This lifecycle test demonstrates how the contract's state changes in response to various user actions (events), and how these changes affect subsequent actions. It's crucial to test these event-triggered changes comprehensively to ensure the contract behaves correctly throughout its entire lifecycle.
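The liquidator figure in the sample output can be checked by hand with the formulas from liquidate(). The sketch below is a worked calculation, not part of the original contract: `LiquidationMathSketch`, `seizedCollateral`, and `netGain` are hypothetical names, and `COLLATERAL_FACTOR = 800` is an assumption inferred from the borrow limit and the logged numbers, since its definition is not shown here.

```solidity
// SPDX-License-Identifier: MIT
pragma solidity ^0.8.20;

/// @notice Hypothetical helper that replays the liquidation arithmetic.
contract LiquidationMathSketch {
    // ASSUMPTION: 80% collateral factor, inferred from the test's numbers.
    uint256 public constant COLLATERAL_FACTOR = 800;
    // 5% bonus, as declared in LendingWithLiquidation.
    uint256 public constant LIQUIDATION_BONUS = 50;

    /// Collateral plus bonus handed to the liquidator for `repaid` debt.
    function seizedCollateral(uint256 repaid, uint256 price) public pure returns (uint256) {
        uint256 collateral = (repaid * 1e18 * 1000) / (price * COLLATERAL_FACTOR);
        uint256 bonus = (collateral * LIQUIDATION_BONUS) / 1000;
        return collateral + bonus;
    }

    /// Change in the liquidator's token balance: tokens received minus tokens paid.
    function netGain(uint256 repaid, uint256 price) external pure returns (uint256) {
        return seizedCollateral(repaid, price) - repaid;
    }
}
```

With `repaid = 300 ether` and `price = 0.8 ether`, the seized collateral is 468.75 tokens, the bonus is 23.4375 tokens, and the total is 492.1875 tokens. Subtracting the 300 tokens the liquidator paid leaves 192.1875 tokens, which matches the 192187500000000000000 logged in Step 8. Note that the logged value is therefore the liquidator's net balance change, not the gross collateral received.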

Let's look into another detailed example for time-based progression 👇

Example #2: Token Vesting Contract

Let's take a vesting contract as an example of time-based progression and see how to implement lifecycle tests for it.

The token vesting contract manages the gradual release of tokens to beneficiaries over time.

Let's examine its core requirements and states: